Opinion

Red Deer can be more than a one-industry town afraid to diversify.

Thirty years ago, if you had asked me, I would have told you that Red Deer was a vibrant growth community, the commercial centre for central Alberta on the leading edge of diversification. What happened? We got complacent, we got spoiled and we focused on but a single industry.

We accepted a boom/bust cyclical workforce.

We thought of ourselves as industrious and innovative. Our parents were that way on the farm and we took that can-do attitude to the oil patch. First it was during the off-season to supplement farm income; then we outgrew the farm and bought bigger and fancier things for ourselves.

Houses got bigger, as did our cars and toys, but our families got smaller.

We tolerated the busts, and during those parts of the cycle we talked of diversifying our economy, but the big bucks were still to be had in the oil patch.

Our children went to school, and after graduation they drifted away to more secure, albeit less lucrative, careers.

I asked some former Albertans why they don’t move back to Alberta if they can work remotely, and I was told that they still need to socialize with their peers. Coming back to Alberta, they said, would mean losing their sense of worldly consciousness and going back to backwoods philosophy and politics. They would lose that cosmopolitan feel and the freedom to talk openly about issues and politics.

One woman mentioned that she grew up and got her education in Alberta, but it wasn’t until she left Alberta that she saw the opportunities and possibilities. It was like a one-way street turned into Main Street.

Today, I get frustrated as many leaders hold a waiting position for the next boom, justifying it with: “It is just taking longer this time.” We are building new homes almost 10 times faster than our population is growing. That means more property taxes for the city, just not as many new taxpayers.

We are always building new neighbourhoods, even when our population has decreased. We are building new neighbourhoods even when some of our previous new neighbourhoods lie nearly empty. We could not build facilities for citizens during the boom times because we were building new neighbourhoods.

We could not build a 50-metre pool during boom times because prices were too high and the oil patch made trades scarce. We can’t build a 50-metre pool now because we cannot afford the estimates given during boom times. We need funds to build new neighbourhoods.

Red Deer does not have to be just a one-industry town losing its industry, waiting for handouts from other levels of government and waiting for the next boom. Besides, if we do get one more boom, then what?

History is full of stories of places that failed when their one industry collapsed. Forestry, coal, fisheries, steel, iron, manufacturing, tobacco, asbestos, mining, even agriculture: those are the ones that pop into my head.

We should study the places that succeeded, those with few or no resources that became commercial successes.

Nah, we should just wait. I am sure the provincial government will give us all the cash we need. NOT.

I believe we need to embrace the new economy, and if the boom does come along it will be a bonus. Don’t you agree?

It would be nice if the kids could, or better yet wanted to, move to Red Deer. There are superstars in other industries who once called Red Deer home. They could lead the diversification charge.

Business

Socialism vs. Capitalism

People criticize capitalism. A recent Axios-Generation poll says, “College students prefer socialism to capitalism.”

Why?

Because they believe absurd myths. Like the claim that the Soviet Union “wasn’t real socialism.”

Socialism guru Noam Chomsky tells students that. He says the Soviet Union “was about as remote from socialism as you could imagine.”

Give me a break.

The Soviets made private business illegal.

If that’s not socialism, I’m not sure what is.

“Socialism means abolishing private property and … replacing it with some form of collective ownership,” explains economist Ben Powell. “The Soviet Union had an abundance of that.”

Socialism always fails. Look at Venezuela, the richest country in Latin America about 40 years ago. Now people there face food shortages, poverty, misery and election outcomes the regime ignores.

But Al Jazeera claims Venezuela’s failure has “little to do with socialism, and a lot to do with poor governance … economic policies have failed to adjust to reality.”

“That’s the nature of socialism!” exclaims Powell. “Economic policies fail to adjust to reality. Economic reality evolves every day. Millions of decentralized entrepreneurs and consumers make fine-tuning adjustments.”

Political leaders can’t keep up with that.

Still, pundits and politicians tell people that socialism does work in Scandinavia.

Mad Money host Jim Cramer calls Norway “as socialist as they come!”

This too is nonsense.

“Sweden isn’t socialist,” says Powell. “Volvo is a private company. Restaurants, hotels, they’re privately owned.”

Norway, Denmark and Sweden are all free market economies.

Denmark’s former prime minister was so annoyed with economically ignorant Americans like Bernie Sanders calling Scandinavia “socialist” that he came to America to tell Harvard students that his country “is far from a socialist planned economy. Denmark is a market economy.”

Powell says young people “hear the preaching of socialism, about equality, but they don’t look on what it actually delivers: poverty, starvation, early death.”

For thousands of years, the world had almost no wealth creation. Then, some countries tried capitalism. That changed everything.

“In the last 20 years, we’ve seen more humans escape extreme poverty than any other time in human history, and that’s because of markets,” says Powell.

Capitalism makes poor people richer.

Former Rep. Jamaal Bowman (D-N.Y.) calls capitalism “slavery by another name.”

Rep. Alexandria Ocasio-Cortez (D-N.Y.) claims, “No one ever makes a billion dollars. You take a billion dollars.”

That’s another myth.

People think there’s a fixed amount of money. So when someone gets rich, others lose.

But it’s not true. In a free market, the only way entrepreneurs can get rich is by creating new wealth.

Yes, Steve Jobs pocketed billions, but by creating Apple, he gave the rest of us even more. He invented technology that makes all of us better off.

“I hope that we get 100 new super billionaires,” says economist Dan Mitchell, “because that means 100 new people figured out ways to make the rest of our lives better off.”

Former Labor Secretary Robert Reich advocates the opposite: “Let’s abolish billionaires,” he says.

He misses the most important fact about capitalism: it’s voluntary.

“I’m not giving Jeff Bezos any money unless he’s selling me something that I value more than that money,” says Mitchell.

That’s why, under capitalism, the poor and middle class get richer, too.

“The economic pie grows,” says Mitchell. “We are much richer than our grandparents.”

When the media say the “middle class is in decline,” they’re technically right, but they don’t understand why it’s shrinking.

“It’s shrinking because more and more people are moving into upper income quintiles,” says Mitchell. “The rich get richer in a capitalist society. But guess what? The rest of us get richer as well.”

I cover more myths about socialism and capitalism in my new video.

Alberta

Alberta project would be “the biggest carbon capture and storage project in the world”

Pathways Alliance CEO Kendall Dilling is interviewed at the World Petroleum Congress in Calgary, Monday, Sept. 18, 2023. THE CANADIAN PRESS/Jeff McIntosh

From Resource Works

Carbon capture gives biggest bang for carbon tax buck

CCS much cheaper than fuel switching: report

Canada’s climate change strategy is now joined at the hip to a pipeline. Two pipelines, actually — one for oil, one for carbon dioxide.

The MOU signed between Ottawa and Alberta two weeks ago ties a new oil pipeline to the Pathways Alliance, which includes what has been billed as the largest carbon capture proposal in the world.

One cannot proceed without the other. It’s quite possible neither will proceed.

The timing for multi-billion-dollar carbon capture projects in general may be off, given the retreat we are now seeing from industry and government on decarbonization, especially in the U.S., our biggest energy customer and competitor.

But if the public, industry and our governments still think getting Canada’s GHG emissions down is a priority, decarbonizing Alberta oil, gas and heavy industry through CCS promises to be the most cost-effective technology approach.

New modelling by Clean Prosperity, a climate policy organization, finds large-scale carbon capture gets the biggest bang for the carbon tax buck.

Which makes sense. If oil and gas production in Alberta is Canada’s single largest emitter of CO2 and methane, it stands to reason that abating methane and sequestering CO2 from oil and gas production are where the biggest gains are to be had.

A number of CCS projects are already in operation in Alberta, including Shell’s Quest project, which captures about 1 million tonnes of CO2 annually from the Scotford upgrader.

What is CO2 worth?

Clean Prosperity estimates that industrial carbon pricing of $130 to $150 per tonne in Alberta, combined with CCS, could result in $90 billion in investment and 70 megatonnes (MT) annually of GHG abatement or sequestration. The lion’s share of that would come from CCS.

To put that in perspective, 70 MT is about 10% of Canada’s total GHG emissions (694 MT).

The report cautions that these estimates are “hypothetical” and gives no timelines.

All of the main policy tools recommended by Clean Prosperity to achieve these GHG reductions are contained in the Ottawa-Alberta MOU.

One important policy in the MOU includes enhanced oil recovery (EOR), in which CO2 is injected into older conventional oil wells to increase output. While this increases oil production, it also sequesters large amounts of CO2.

Under Trudeau-era policies, EOR was excluded from federal CCS tax credits. The MOU extends credits and other incentives to EOR, which improves the value proposition for carbon capture.

Under the MOU, Alberta agrees to raise its industrial carbon pricing from the current $95 per tonne to a minimum of $130 per tonne under its TIER system (Technology Innovation and Emission Reduction).

The biggest bang for the buck

Using a price of $130 to $150 per tonne, Clean Prosperity looked at two main pathways to GHG reductions: fuel switching in the power sector and CCS.

Fuel switching would involve replacing natural gas power generation with renewables, nuclear power, renewable natural gas or hydrogen.

“We calculated that fuel switching is more expensive,” Brendan Frank, director of policy and strategy for Clean Prosperity, told me.

Achieving the same GHG reductions through fuel switching would require industrial carbon prices of $300 to $1,000 per tonne, Frank said.

Clean Prosperity looked at five big sectoral emitters: oil and gas extraction, chemical manufacturing, pipeline transportation, petroleum refining, and cement manufacturing.

“We find that CCUS represents the largest opportunity for meaningful, cost-effective emissions reductions across five sectors,” the report states.

Fuel switching requires higher carbon prices than CCUS.

Measures like energy efficiency and methane abatement are included in Clean Prosperity’s calculations, but again CCS takes the biggest bite out of Alberta’s GHGs.

“Efficiency and (methane) abatement are a portion of it, but it’s a fairly small slice,” Frank said. “The overwhelming majority of it is in carbon capture.”

From left, Alberta Minister of Energy Marg McCuaig-Boyd, Shell Canada President Lorraine Mitchelmore, CEO of Royal Dutch Shell Ben van Beurden, Marathon Oil Executive Brian Maynard, Shell ER Manager Stephen Velthuizen, and British High Commissioner to Canada Howard Drake open the valve to the Quest carbon capture and storage facility in Fort Saskatchewan, Alta., on Friday, November 6, 2015. Quest is designed to capture and safely store more than one million tonnes of CO2 each year, the equivalent of the emissions from about 250,000 cars. THE CANADIAN PRESS/Jason Franson

Credit where credit is due

Setting an industrial carbon price is one thing. Putting it into effect through a workable carbon credit market is another.

“A high headline price is meaningless without higher credit prices,” the report states.

“TIER credit prices have declined steadily since 2023 and traded below $20 per tonne as of November 2025. With credit prices this low, the $95 per tonne headline price has a negligible effect on investment decisions and carbon markets will not drive CCUS deployment or fuel switching.”

Clean Prosperity recommends a kind of government-backstopped insurance mechanism guaranteeing carbon credit prices, which could otherwise be vulnerable to political and market vagaries.

Specifically, it recommends carbon contracts for difference (CCfD).

“A straightforward way to think about it is insurance,” Frank explains.

Carbon credit prices are vulnerable to risks, including “stroke-of-pen risks,” in which governments change or cancel price schedules. There are also market risks.

CCfDs are contractual agreements between the private sector and government that guarantee a specific credit value over a specified time period.

“The private actor basically has insurance that the credits they’ll generate, as a result of making whatever low-carbon investment they’re after, will get a certain amount of revenue,” Frank said. “That certainty is enough to, in our view, unlock a lot of these projects.”
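To make the insurance idea concrete, here is a rough sketch of how such a contract might settle in a given year. It is an illustration under stated assumptions, not Clean Prosperity’s design: the guaranteed (strike) price of $130 per tonne is borrowed from the MOU’s minimum TIER price, the $20 market price echoes the sub-$20 credit prices the report cites, and 1 million tonnes is roughly Quest’s annual capture. The class name is hypothetical, and the report does not say whether its proposed CCfDs would be one-sided (government tops up only) or two-sided (the project also hands back revenue above the strike), so the sketch treats that as an option.

```python
from dataclasses import dataclass


@dataclass
class CarbonContractForDifference:
    """Hypothetical CCfD that guarantees a credit value (strike price) per tonne."""

    strike_price: float  # guaranteed credit value, $ per tonne (assumed figure)
    two_sided: bool = False  # True: project repays revenue above the strike price

    def settlement(self, market_price: float, tonnes: float) -> float:
        """Government payment to the project; negative means the project pays back."""
        gap = self.strike_price - market_price
        if gap < 0 and not self.two_sided:
            # One-sided contract: market already exceeds the guarantee, no payment either way
            return 0.0
        return gap * tonnes

    def total_revenue(self, market_price: float, tonnes: float) -> float:
        """Credit sales at the market price plus (or minus) the contract settlement."""
        return market_price * tonnes + self.settlement(market_price, tonnes)


contract = CarbonContractForDifference(strike_price=130.0)
print(contract.settlement(market_price=20.0, tonnes=1_000_000))  # 110000000.0
print(contract.total_revenue(market_price=20.0, tonnes=1_000_000))  # 130000000.0
```

Under those assumptions the government would pay $110 million for the year, lifting the project’s credit revenue to the guaranteed $130 million no matter how far the market price falls. Under a two-sided contract, a market price of $150 would instead see the project hand back $20 million, which is what keeps the guarantee from becoming a one-way subsidy.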

From the perspective of Canadian CCS equipment manufacturers like Vancouver’s Svante, there is one policy piece still missing from the MOU: eligibility for the Clean Technology Manufacturing (CTM) Investment tax credit.

“Carbon capture was left out of that,” said Svante co-founder Brett Henkel.

Svante recently built a major manufacturing plant in Burnaby for its carbon capture filters and machines, with many of its prospective customers expected to be in the U.S.

The $20 billion Pathways project could be a huge boon for Canadian companies like Svante and Calgary’s Entropy. But there is a fear that Canadian CCS equipment manufacturers could be shut out of the project.

“If the oil sands companies put out for a bid all this equipment that’s needed, it is highly likely that a lot of that equipment is sourced outside of Canada, because the support for Canadian manufacturing is not there,” Henkel said.

Henkel hopes to see CCS manufacturing added to the eligibility for the CTM investment tax credit.

“To really build this eco-system in Canada and to support the Pathways Alliance project, we need that amendment to happen.”

Resource Works News

-

Business22 hours ago

Business22 hours agoICYMI: Largest fraud in US history? Independent Journalist visits numerous daycare centres with no children, revealing massive scam

-

Alberta14 hours ago

Alberta14 hours agoAlberta project would be “the biggest carbon capture and storage project in the world”

-

Energy11 hours ago

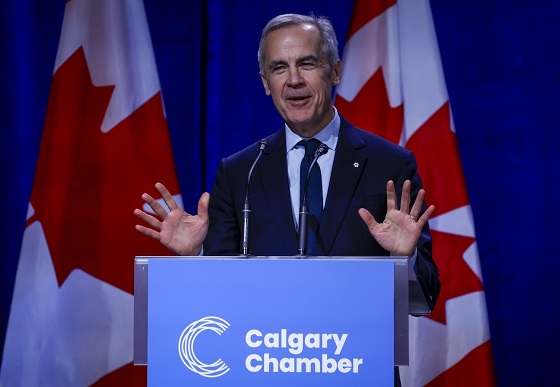

Energy11 hours agoCanada’s debate on energy levelled up in 2025

-

Business12 hours ago

Business12 hours agoSocialism vs. Capitalism

-

Energy13 hours ago

Energy13 hours agoNew Poll Shows Ontarians See Oil & Gas as Key to Jobs, Economy, and Trade

-

International2 days ago

International2 days agoChina Stages Massive Live-Fire Encirclement Drill Around Taiwan as Washington and Japan Fortify

-

Energy2 days ago

Energy2 days agoRulings could affect energy prices everywhere: Climate activists v. the energy industry in 2026

-

Digital ID2 days ago

Digital ID2 days agoThe Global Push for Government Mandated Digital IDs And Why You Should Worry