Censorship Industrial Complex

Internet censorship laws lead a majority of Canadians to believe free speech is threatened: poll

From LifeSiteNews

In light of the barrage of new internet censorship laws being passed or brought forth by the federal government of Prime Minister Justin Trudeau, a new survey revealed that the majority of Canadians feel their freedom of speech is under attack.

According to results from a Leger survey conducted April 26-28 that sampled responses from 1,610 Canadians, 57 percent think their freedom of speech is being threatened, with 36 percent not believing this to be true.

Not surprisingly, respondents who intend to vote Conservative were the most likely to feel that their free speech is under attack, at about 76 percent. Seventy percent of that group, and the same share of those aged 55 or older, feel that Canada is not as free as before.

The survey results also show that 62 percent of Canadians think it is “tougher to voice their opinion in their country, while 27% think it is easier.”

“Conservative voters (70%) and Canadians aged 55 or older (70%) are more likely to think that it is tougher now to express their opinion,” Leger noted in its survey.

Not surprisingly, Liberal voters were the most supportive of placing limits on free speech, with 64 percent agreeing with the following: “There should be limits on freedom of speech to ensure that things such as hate speech, speeches preaching a form of intolerance, or speeches against democracy be prevented from reaching the public.”

The survey also revealed that about one in four conservative voters believe that their views are not socially acceptable.

Sixty percent of conservative voters said that free speech should never be limited in any manner and that one should be able to express their opinions publicly without issue.

As for why respondents believe free speech is under attack, 11 percent blamed politicians for causing more hate, eight percent blamed “right-wing” extremists, and seven percent blamed woke-minded thinking. Twenty-nine percent of Canadians felt that a growing lack of respect is to blame, and 13 percent thought it is due to “a degradation of the moral fibre in the country.”

A bit concerningly, only six of 10 Canadians have confidence that the next federal election, scheduled for 2025, will be “free and fair,” with 29 percent saying outright they are “not confident.”

When it comes to internet censorship laws, the most recent one introduced in the House of Commons is Bill C-63, the Online Harms Act, a federal government bill from Liberal Justice Minister and Attorney General Arif Virani that could lead to large fines or jail time for vaguely defined online “hate speech” infractions.

LifeSiteNews recently reported how well-known Canadian psychologist Jordan Peterson and Queen’s University law professor Bruce Pardy blasted Trudeau and his government over Bill C-63.

Peterson noted that in his view, Bill C-63 is “designed … to produce a more general regime for online policing.”

“To me, that’s what it looks like,” he said.

Two other Trudeau bills dealing with freedom on the internet have become law. The first is Bill C-11, the Online Streaming Act, which mandates that Canada’s broadcast regulator, the Canadian Radio-television and Telecommunications Commission (CRTC), regulate online content on platforms such as YouTube and Netflix to ensure that such platforms are promoting content in accordance with a variety of its guidelines.

Trudeau’s other internet censorship law, the Online News Act, was passed by the Senate in June 2023.

The law mandates that Big Tech companies pay to publish Canadian content on their platforms. As a result, Meta, the parent company of Facebook and Instagram, blocked all access to news content in Canada. Google has promised to do the same rather than pay the fees laid out in the new legislation.

Critics of the recent laws, such as tech mogul Elon Musk, have said they show “Trudeau is trying to crush free speech in Canada.”

Business

Ted Cruz, Jim Jordan Ramp Up Pressure On Google Parent Company To Deal With ‘Censorship’

From the Daily Caller News Foundation

By Andi Shae Napier

Republican Texas Sen. Ted Cruz and Republican Ohio Rep. Jim Jordan are turning their attention to Google over concerns that the tech giant is censoring users and infringing on Americans’ free speech rights.

Google’s parent company Alphabet, which also owns YouTube, appears to be the GOP’s next Big Tech target. Lawmakers seem to be turning their attention to Alphabet after Mark Zuckerberg’s Meta ended its controversial fact-checking program in favor of a Community Notes system similar to the one used by Elon Musk’s X.

Cruz recently informed reporters of his and fellow senators’ plans to protect free speech.

“Stopping online censorship is a major priority for the Commerce Committee,” Cruz said, as reported by Politico. “And we are going to utilize every point of leverage we have to protect free speech online.”

Following his meeting with Alphabet CEO Sundar Pichai last month, Cruz told the outlet, “Big Tech censorship was the single most important topic.”

Jordan, Chairman of the House Judiciary Committee, sent subpoenas to Alphabet and other tech giants such as Rumble, TikTok and Apple in February regarding “compliance with foreign censorship laws, regulations, judicial orders, or other government-initiated efforts” with the intent to discover how foreign governments, or the Biden administration, have limited Americans’ access to free speech.

“Throughout the previous Congress, the Committee expressed concern over YouTube’s censorship of conservatives and political speech,” Jordan wrote in a letter to Pichai in March. “To develop effective legislation, such as the possible enactment of new statutory limits on the executive branch’s ability to work with Big Tech to restrict the circulation of content and deplatform users, the Committee must first understand how and to what extent the executive branch coerced and colluded with companies and other intermediaries to censor speech.”

Jordan subpoenaed tech CEOs in 2023 as well, including Satya Nadella of Microsoft, Tim Cook of Apple and Pichai, among others.

Although the latest action against the tech giant is recent, the battle stretches back to President Donald Trump’s first administration. Cruz began his investigation of Google in 2019 when he questioned Karan Bhatia, the company’s Vice President for Government Affairs & Public Policy at the time, in a Senate Judiciary Committee hearing. Cruz brought forth a presentation suggesting tech companies, including Google, were straying from free speech and leaning towards censorship.

Even during Congress’ recess, pressure on Google continues to mount as a federal court ruled Thursday that Google’s ad-tech unit violates U.S. antitrust laws and creates an illegal monopoly. This marks the second antitrust ruling against the tech giant as a different court ruled in 2024 that Google abused its dominance of the online search market.

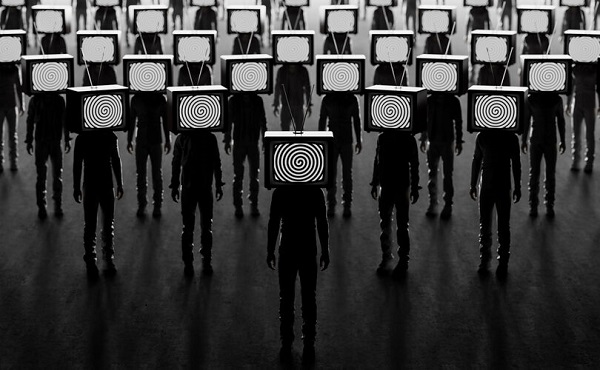

Censorship Industrial Complex

CIA mind control never ended – it evolved and went mainstream

From LifeSiteNews

From the CIA’s MKUltra to Britain’s COVID fear tactics, governments have spent decades perfecting psychological operations against their own people.

Surveying the battlefield

For thousands of years, military strategists have understood that an army’s success often depends not on its size or even on its armaments but on its knowledge of the opponent.

After all, as Sun Tzu observes:

If you know the enemy and know yourself, you need not fear the result of a hundred battles. If you know yourself but not the enemy, for every victory gained you will also suffer a defeat. If you know neither the enemy nor yourself, you will succumb in every battle.

It follows, then, that the success of the globalists in their fifth-generation war on us all depends on their knowledge of humanity itself.

What makes people tick? What motivates and demotivates them? What stimuli do they respond to, and in what way do they respond?

From the viewpoint of those wishing to manipulate, control and subdue humanity, the knowledge of the human mind that can be gleaned from the answers to these questions is the most prized knowledge of all.

So, it shouldn’t be surprising to learn that not only scientific researchers but military planners and government officials have spent centuries trying to better understand humans and their behaviors – and, more importantly, how to mold, influence, shape, or outright control those behaviors.

Everyone knows about Ivan Pavlov’s experiments in conditioning. Any high schooler could tell you how Pavlov was able to condition dogs to salivate upon hearing the ringing of a dinner bell.

But how many know that Pavlov’s research didn’t end with his observation of canines? That he next began duplicating his experiments on human subjects? That those human experiments saw Pavlov and his protégé, Nikolai Krasnogorsky, scooping orphans off the streets, drugging them, surgically fitting them with salivation monitors and force-feeding them food so that these children, like Pavlov’s dogs, could be trained to salivate on command?

How many are familiar with the experimenters who followed in Pavlov’s footsteps? How many have seen the footage of John B. Watson’s “Little Albert” experiments, where the psychologist deliberately traumatized an 11-month-old baby in an attempt to refine the techniques of conditioning humans?

How many have read Watson himself bragging that “[a]fter conditioning, even the sight of the long whiskers of a Santa Claus mask sends the youngster scuttling away, crying and shaking his head from side to side”?

How many have followed the thread from Pavlov and Watson and the “classical conditioning” researchers to the “radical behaviorists” like B. F. Skinner and his work in perfecting operant conditioning?

How many have read Skinner’s Walden Two, in which he proposes a scheme for creating a utopian society by conditioning children from birth to assume specific roles in society?

By this point, it’s fairly common knowledge that the CIA conducted mind control experiments like Project MKUltra, using operatives like Sidney Gottlieb and Dr. Ewen Cameron to administer LSD to unwitting subjects and conduct other ghoulish experiments in mental manipulation. But how many have heard of MKSearch or MKChickwit or MKOften or any of the other spin-offs of this nightmarish research?

How many know these experiments “were designed to destabilize human personality by creating behavior disturbances, altered sex patterns, aberrant behavior using sensory deprivation and various powerful stress-producing chemicals, and mind-altering substances” and were carried out on so-called “expendables” – i.e., “people whose death or disappearance would arouse no suspicion”?

How many have heard of George Brock Chisholm, who served as the first Director-General of the World Health Organization and helped spearhead the World Federation for Mental Health? How many have read the transcript of his 1945 lecture, “The Reestablishment of Peacetime Psychiatry,” in which he declared, “If the race is to be freed from its crippling burden of good and evil it must be psychiatrists who take the original responsibility”?

And how many are aware that Chisholm’s call to action was heeded by men like British military psychiatrist Colonel John Rawlings Rees, the first president of Chisholm’s World Federation for Mental Health and chair of the infamous Tavistock Institute from 1933 to 1947?

How many know the story of how Dr. Jim Mitchell – a military retiree and psychologist who had contracted to provide training services to the CIA – took the findings of Dr. Martin Seligman on the psychological phenomenon of “learned helplessness” and weaponized them for the CIA in the service of the agency’s post-9/11 illegal torture program?

Whether the general public is aware of this documented history or not, the record shows that the last 125 years of research into the human psyche has been conducted – or at least weaponized – by Machiavellian manipulators and secret schemers whose intent is to socially engineer the masses.

And, as the science of the mind progresses in the 21st century, these social engineering schemes are only getting more effective.

The information war

The alternative media has certainly had cause to note that we here in the 21st century are the (largely unwitting) targets of a large-scale information war. This war is being waged upon us largely (though not exclusively) by our own governments.

Occasionally, stories of some of the campaigns in this war break through the information blockade, and the public catches a glimpse of the battle that is being waged against them on all fronts.

Bemused Canadians, for example, were able to read about the Canadian military’s bizarre “wolf letter” psyop in the pages of The Ottawa Citizen back in 2021. But any concerns that might have been raised by this psyop and its wild story of forged government letters and recorded wolf noises were soon quelled by the usual establishment lapdog journalists.

The whole thing, we were told, was caused by “a handful of military reservists testing psychological tactics at a weekend exercise” and “new control measures are now in place to ensure psychological operation exercises and influence activities do not reach unintended audiences” – so, obviously there’s nothing more to worry about!

Residents of the U.K., meanwhile, got their own glimpse of the infowar in 2021 when members of the Scientific Pandemic Influenza Group on Behaviour (SPI-B) – a group providing “independent, expert, social and behavioural science advice” to the U.K. government – admitted they were guilty of “using fear as a means of control.”

Tasked with providing insight on how to make Britons compliant with their government’s lockdown, social distancing, masking, and other restrictions at the beginning of the scamdemic, the SPI-B experts urged the government to increase “the perceived level of personal threat” from COVID-19. Multiple members of the SPI-B team later expressed regret about the scheme, calling it “totalitarian” and unethical.

One SPI-B member confessed: “You could call psychology ‘mind control.’ That’s what we do.”

Another put it even more bluntly: “Without a vaccine, psychology is your main weapon … Psychology has had a really good epidemic, actually.”

But to the extent that these operations ever come to public light, it is almost always in disconnected and decontextualized stories like these. Those Canadians who learned about the “wolf letter” psyop, for example, likely never read about the SPI-B scamdemic psyop, let alone connected the events together as evidence of the all-out infowar.

In recent years, however, the existence of the infowar has not only become undeniable. It is undenied.

The cognitive domain of the information battlespace

In 2022, the Associated Press published “‘Pre-bunking’ shows promise in fight against misinformation,” an article touting new research that claims to show progress in the creation of new weapons in the information war.

After detailing the usual examples of the scourge of “misinformation” – i.e., observations that erode public faith in “democratic institutions, journalism and science” – the article then reports uncritically on new techniques that are being developed to trick the public into once again trusting these demonstrably untrustworthy institutions:

New findings from university researchers and Google, however, reveal that one of the most promising responses to misinformation may also be one of the simplest.

In a paper published Wednesday in the journal Science Advances, the researchers detail how short online videos that teach basic critical thinking skills can make people better able to resist misinformation.

The researchers created a series of videos similar to a public service announcement that focused on specific misinformation techniques – characteristics seen in many common false claims that include emotionally charged language, personal attacks or false comparisons between two unrelated items.

Researchers then gave people a series of claims and found that those who watched the videos were significantly better at distinguishing false information from accurate information.

Although research like that touted by the AP is ostensibly civilian in nature, the fact that this information campaign is part of a literal military battle that is being waged against us is now starting to be admitted, as well.

In 2023, for example, the Japanese military officially added the “cognitive domain” as the newest battle domain in Japan’s National Defense Program Guideline. In addition to the traditional domains of territorial land, water, and airspace, and to newly added domains like space, cyberspace, and the electromagnetic domain, Japan’s defense authorities now claim the cognitive space as part of their remit.

According to The Global Times:

The building of such cognitive capability would also be written into the National Security Strategy, one of the three major diplomatic and security documents to be amended before the end of 2022, VOA Chinese reported, citing the theory that the Japanese defense authorities and the Self-Defense Force attach great importance to the “misinformation” released by Russia and China, consider that information spread in the Chinese language is a global trend and that cognitive warfare by the island of Taiwan against the Chinese mainland provides valuable experience for research and study.

[…]

Analysts said that cognitive warfare is a combination of digital information, media and spy technology that leads public opinion to extremes in order to affect the basis of diplomacy between countries and to realize the goals of political manipulation, citing the U.S.’ infamous “peaceful transfer of power” strategy in other countries as an example.

The recognition of the “cognitive domain” as a literal battlefield is not limited to the Japanese defense forces, however.

In 2019, the Chinese State Council Information Office released a white paper on “China’s National Defense in the New Era,” arguing that “[w]ar is evolving in form towards informationized [sic] warfare, and intelligent warfare is on the horizon.”

In 2022, Motohiro Tsuchiya, a professor at Keio University, wrote an article on “Governing Cognitive Warfare” for Governing the Global Commons: Challenges and Opportunities for US-Japan Cooperation (a publication of the German Marshall Fund of the United States!) in which he warned that the threat of “intelligentized warfare” by China and other U.S. State Department bogeymen necessitated U.S. cooperation to “create and promote rules and norms that can effectively govern cyberwarfare.”

And, perhaps inevitably, it wasn’t long before it was discovered that the real threat in this new “cognitive domain” isn’t the ChiComs or the CRINKs or any other outside force, but… *drumroll, please*… online conspiracy theorists!

That’s right, in 2023, Tomoko Nagasako, a research fellow at The Sasakawa Peace Foundation, penned “The Threat of Conspiracy Theories in the Battle for the Cognitive Domain – A Consideration of the Status of Conspiracy Theories in Japan Based on Attempts at Regime Destruction Overseas.” As you might guess from that title, the article provides “an overview of the state of conspiracy theories overseas and in Japan,” details how these dastardly conspiracy theorists present a threat to national security “[f]rom the perspective of cognitive warfare,” and proposes countermeasures to address these grave dangers to the nation.

And what “conspiracy theories” does Nagasako cite in her piece? That there exists a “deep state” over and above the surface-level government, that the COVID vaccines were harmful, that the U.S. has conducted biological weapons research in Ukraine in recent years… you know, the usual harebrained ideas that only kooky conspiracy realists would even entertain.

Yes, for those who haven’t received the memo yet: there most certainly is a war for your mind. It certainly is taking place right now. It is being waged by militaries around the world. The target of these wars is, more often than not, these very militaries’ fellow countrymen.

To those who are just waking up to this war, you have my deepest sympathy. Realizing that you are a target in a battle you didn’t even know you were fighting in a “cognitive domain” you never even knew existed must be wildly disorienting, to say the least.

But here’s the bad news: new technologies are being developed that will make all of this history – from Pavlov to Skinner to Mitchell to SPI-B – and all of these secret operations – from MKUltra to MKChickwit – and all of these military campaigns – from Chisholm and Rees and the machinations of the Tavistock minions to the ChiComs and the Japanese and the development of cognitive warfare – seem like small potatoes.

As we shall see in a follow-up article, the technology to rewire the brain – quite literally – is already being tested and deployed. And, once these technologies are ready to be unleashed on the public, they may bring the age-old dream of the dictators for total domination of the human population to reality.

Reprinted with permission from the Corbett Report.

-

Alberta2 days ago

Alberta2 days agoProvince to expand services provided by Alberta Sheriffs: New policing option for municipalities

-

Bruce Dowbiggin2 days ago

Bruce Dowbiggin2 days agoIs HNIC Ready For The Winnipeg Jets To Be Canada’s Heroes?

-

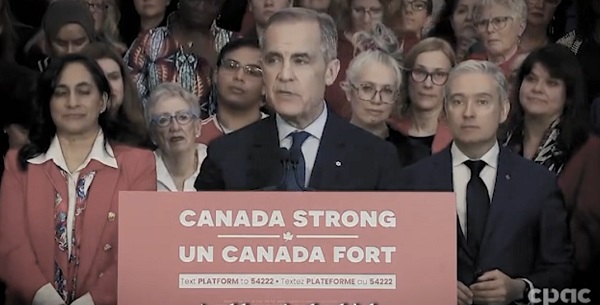

2025 Federal Election2 days ago

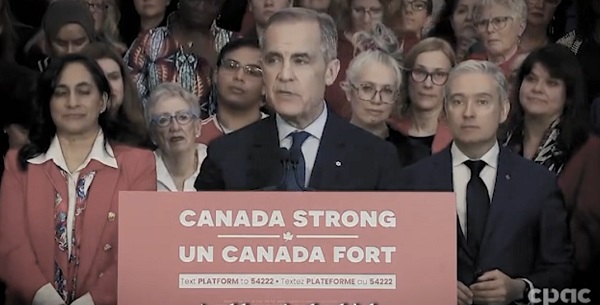

2025 Federal Election2 days agoCSIS Warned Beijing Would Brand Conservatives as Trumpian. Now Carney’s Campaign Is Doing It.

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoNo Matter The Winner – My Canada Is Gone

-

Alberta2 days ago

Alberta2 days agoMade in Alberta! Province makes it easier to support local products with Buy Local program

-

Health2 days ago

Health2 days agoHorrific and Deadly Effects of Antidepressants

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoCampaign 2025 : The Liberal Costed Platform – Taxpayer Funded Fiction

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoA Perfect Storm of Corruption, Foreign Interference, and National Security Failures