Brownstone Institute

They Are Scrubbing the Internet Right Now

From the Brownstone Institute

By

Instances of censorship are growing to the point of normalization. Despite ongoing litigation and more public attention, mainstream social media platforms have been more aggressive about removing content in recent months than ever before. Podcasters know for sure what will be instantly deleted and debate among themselves over content in gray areas. Some, like Brownstone, have given up on YouTube in favor of Rumble, sacrificing vast audiences just so their content survives to see the light of day.

It’s not always about being censored or not. Today’s algorithms include a range of tools that affect searchability and findability. For example, the Joe Rogan interview with Donald Trump racked up an astonishing 34 million views before YouTube and Google tweaked their search engines to make it hard to discover, and a technical malfunction on their watch disabled viewing for many people. Faced with this, Rogan went to the platform X to post all three hours.

Navigating this thicket of censorship and quasi-censorship has become part of the business model of alternative media.

Those are just the headline cases. Beneath the headlines, there are technical events taking place that are fundamentally affecting the ability of any historian even to look back and tell what is happening. Incredibly, the service Archive.org, which has been around since 1996, has stopped taking images of content on all platforms. For the first time in nearly 30 years, we have gone a long stretch of time, since October 8-10, without this service chronicling the life of the Internet in real time.

As of this writing, we have no way to verify content posted during the three weeks of October leading up to the most contentious and consequential election of our lifetimes. Crucially, this is not about partisanship or ideological discrimination. No websites on the Internet are being archived in ways that are available to users. In effect, the whole memory of our main information system is just a big black hole right now.

The trouble on Archive.org began on October 8, 2024, when the service was suddenly hit with a massive Distributed Denial of Service (DDoS) attack that not only took down the service but introduced a level of failure that nearly took it out completely. Working around the clock, Archive.org came back as a read-only service, which is where it stands today. However, you can only read content that was posted before the attack. The service has yet to resume any public display of mirroring of any sites on the Internet.

In other words, the only source on the entire World Wide Web that mirrors content in real time has been disabled. For the first time since the invention of the web browser itself, researchers have been robbed of the ability to compare past with present content, a staple technique for anyone looking into government and corporate actions.

It was this service, for example, that enabled Brownstone researchers to discover precisely what the CDC had said about Plexiglas, filtration systems, mail-in ballots, and rental moratoriums. That content was all later scrubbed off the live Internet, so accessing archive copies was the only way we could know and verify what was true. It was the same with the World Health Organization and its disparagement of natural immunity, which was later changed. We were able to document the shifting definitions thanks only to this tool, which is now disabled.
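For readers who want to see what such a comparison involves in practice, here is a minimal sketch in Python. It assumes you already hold two saved text copies of the same page, one archived and one live. The function name `changed_lines` is our own illustrative choice, not part of any archive tool. It relies only on the standard-library `difflib` module.

```python
import difflib


def changed_lines(old_text: str, new_text: str) -> list[str]:
    """List lines removed from the old copy ("- ") or added in the new copy ("+ ").

    Hypothetical helper for illustration: both arguments are plain-text
    snapshots of the same page taken at different times.
    """
    diff = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    # ndiff also emits unchanged lines ("  ") and hint lines ("? "); drop those.
    return [line for line in diff if line.startswith(("- ", "+ "))]
```

An empty result means the two copies match line for line. Anything else is a starting point for asking what was changed, and when.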

What this means is the following: Any website can post anything today and take it down tomorrow and leave no record of what it posted unless some user somewhere happened to take a screenshot. Even then there is no way to verify its authenticity. The standard approach for knowing who said what and when is now gone. That is to say that the whole Internet is already being censored in real time so that during these crucial weeks, when vast swaths of the public fully expect foul play, anyone in the information industry can get away with anything and not get caught.

We know what you are thinking. Surely this DDoS attack was not a coincidence. The timing was just too perfect. And maybe that is right. We just do not know. Does Archive.org suspect something along those lines? Here is what they say:

Last week, along with a DDOS attack and exposure of patron email addresses and encrypted passwords, the Internet Archive’s website javascript was defaced, leading us to bring the site down to access and improve our security. The stored data of the Internet Archive is safe and we are working on resuming services safely. This new reality requires heightened attention to cyber security and we are responding. We apologize for the impact of these library services being unavailable.

Deep state? As with all these things, there is no way to know, but the effort to blast away the ability of the Internet to have a verified history fits neatly into the stakeholder model of information distribution that has clearly been prioritized on a global level. The Declaration for the Future of the Internet makes that very clear: the Internet should be “governed through the multi-stakeholder approach, whereby governments and relevant authorities partner with academics, civil society, the private sector, technical community and others.” All of these stakeholders benefit from the ability to act online without leaving a trace.

To be sure, a librarian at Archive.org has written that “While the Wayback Machine has been in read-only mode, web crawling and archiving have continued. Those materials will be available via the Wayback Machine as services are secured.”

When? We do not know. Before the election? In five years? There might be technical reasons for the delay, but if web crawling is continuing behind the scenes, as the note suggests, it would seem that this material too could be made available in read-only mode now. It is not.

Disturbingly, this erasure of Internet memory is happening in more than one place. For many years, Google offered a cached version of the link you were seeking just below the live version. They have plenty of server space to enable that now, but no: that service is now completely gone. In fact, the Google cache service officially ended just a week or two before the Archive.org crash, at the end of September 2024.

Thus the two available tools for searching cached pages on the Internet disappeared within weeks of each other and within weeks of the November 5th election.

Other disturbing trends are also turning Internet search results increasingly into AI-controlled lists of establishment-approved narratives. The norm on the web used to be that search rankings were governed by user behavior, links, citations, and so forth. These were more or less organic metrics, based on an aggregation of data indicating how useful a search result was to Internet users. Put very simply, the more people found a search result useful, the higher it would rank. Google now uses very different metrics to rank search results, including what it considers “trusted sources” and other opaque, subjective determinations.

Furthermore, the most widely used service that once ranked websites based on traffic is now gone. That service was called Alexa. The company that created it was independent. Then one day in 1999, it was bought by Amazon. That seemed encouraging because Amazon was well-heeled. The acquisition seemed to codify the tool that everyone was using as a kind of metric of status on the web. It was common back in the day to take note of an article somewhere on the web and then look it up on Alexa to see its reach. If it was important, one would take notice, but if it was not, no one particularly cared.

This is how an entire generation of web technicians functioned. The system worked as well as one could possibly expect.

Then, in 2014, years after acquiring the ranking service Alexa, Amazon did a strange thing. It released its home assistant (and surveillance device) with the same name. Suddenly, everyone had them in their homes and would find out anything by saying “Hey Alexa.” Something seemed strange about Amazon naming its new product after an unrelated business it had acquired years earlier. No doubt there was some confusion caused by the naming overlap.

Here’s what happened next. In 2022, Amazon actively took down the web ranking tool. It didn’t sell it. It didn’t raise the prices. It didn’t do anything with it. It suddenly made it go completely dark.

No one could figure out why. It was the industry standard, and suddenly it was gone. Not sold, just blasted away. No longer could anyone figure out the traffic-based website rankings of anything without paying very high prices for hard-to-use proprietary products.

All of these data points, which might seem unrelated when considered individually, are actually part of a long trajectory that has shifted our information landscape into unrecognizable territory. The Covid events of 2020-2023, with massive global censorship and propaganda efforts, greatly accelerated these trends.

One wonders if anyone will remember what it was once like. The hacking and hobbling of Archive.org underscores the point: there will be no more memory.

As of this writing, fully three weeks of web content have not been archived. What we are missing and what has changed is anyone’s guess. And we have no idea when the service will come back. It is entirely possible that it will not come back, that the only real history to which we can take recourse will be pre-October 8, 2024, the date on which everything changed.

The Internet was founded to be free and democratic. It will require herculean efforts at this point to restore that vision, because something else is quickly replacing it.

Brownstone Institute

The Unmasking of Vaccine Science

From the Brownstone Institute

By

I recently purchased Aaron Siri’s new book Vaccines, Amen. As I flipped through the pages, I noticed a section devoted to his now-famous deposition of Dr Stanley Plotkin, the “godfather” of vaccines.

I’d seen viral clips circulating on social media, but I had never taken the time to read the full transcript — until now.

Siri’s interrogation was methodical and unflinching…a masterclass in extracting uncomfortable truths.

A Legal Showdown

In January 2018, Dr Stanley Plotkin, a towering figure in immunology and co-developer of the rubella vaccine, was deposed under oath in Pennsylvania by attorney Aaron Siri.

The case stemmed from a custody dispute in Michigan, where divorced parents disagreed over whether their daughter should be vaccinated. Plotkin had agreed to testify in support of vaccination on behalf of the father.

What followed over the next nine hours, captured in a 400-page transcript, was extraordinary.

Plotkin’s testimony revealed ethical blind spots, scientific hubris, and a troubling indifference to vaccine safety data.

He mocked religious objectors, defended experiments on mentally disabled children, and dismissed glaring weaknesses in vaccine surveillance systems.

A System Built on Conflicts

From the outset, Plotkin admitted to a web of industry entanglements.

He confirmed receiving payments from Merck, Sanofi, GSK, Pfizer, and several biotech firms. These were not occasional consultancies but long-standing financial relationships with the very manufacturers of the vaccines he promoted.

Plotkin appeared taken aback when Siri questioned his financial windfall from royalties on products like RotaTeq, and expressed surprise at the “tone” of the deposition.

Siri pressed on: “You didn’t anticipate that your financial dealings with those companies would be relevant?”

Plotkin replied: “I guess, no, I did not perceive that that was relevant to my opinion as to whether a child should receive vaccines.”

The man entrusted with shaping national vaccine policy had a direct financial stake in its expansion, yet he brushed it aside as irrelevant.

Contempt for Religious Dissent

Siri questioned Plotkin on his past statements, including one in which he described vaccine critics as “religious zealots who believe that the will of God includes death and disease.”

Siri asked whether he stood by that statement. Plotkin replied emphatically, “I absolutely do.”

Plotkin was not interested in ethical pluralism or accommodating divergent moral frameworks. For him, public health was a war, and religious objectors were the enemy.

He also admitted to using human foetal cells in vaccine production — specifically WI-38, a cell line derived from an aborted foetus at three months’ gestation.

Siri asked if Plotkin had authored papers involving dozens of abortions for tissue collection. Plotkin shrugged: “I don’t remember the exact number…but quite a few.”

Plotkin regarded this as a scientific necessity, though for many people — including Catholics and Orthodox Jews — it remains a profound moral concern.

Rather than acknowledging such sensitivities, Plotkin dismissed them outright, rejecting the idea that faith-based values should influence public health policy.

That kind of absolutism, where scientific aims override moral boundaries, has since drawn criticism from ethicists and public health leaders alike.

As NIH director Jay Bhattacharya later observed during his 2025 Senate confirmation hearing, such absolutism erodes trust.

“In public health, we need to make sure the products of science are ethically acceptable to everybody,” he said. “Having alternatives that are not ethically conflicted with foetal cell lines is not just an ethical issue — it’s a public health issue.”

Safety Assumed, Not Proven

When the discussion turned to safety, Siri asked, “Are you aware of any study that compares vaccinated children to completely unvaccinated children?”

Plotkin replied that he was “not aware of well-controlled studies.”

Asked why no placebo-controlled trials had been conducted on routine childhood vaccines such as hepatitis B, Plotkin said such trials would be “ethically difficult.”

That rationale, Siri noted, creates a scientific blind spot. If trials are deemed too unethical to conduct, then gold-standard safety data — the kind required for other pharmaceuticals — simply do not exist for the full childhood vaccine schedule.

Siri pointed to one example: Merck’s hepatitis B vaccine, administered to newborns. The company had only monitored participants for adverse events for five days after injection.

Plotkin didn’t dispute it. “Five days is certainly short for follow-up,” he admitted, but claimed that “most serious events” would occur within that time frame.

Siri challenged the idea that such a narrow window could capture meaningful safety data — especially when autoimmune or neurodevelopmental effects could take weeks or months to emerge.

Siri pushed on. He asked Plotkin if the DTaP and Tdap vaccines — for diphtheria, tetanus and pertussis — could cause autism.

“I feel confident they do not,” Plotkin replied.

But when shown the Institute of Medicine’s 2011 report, which found the evidence “inadequate to accept or reject” a causal link between DTaP and autism, Plotkin countered, “Yes, but the point is that there were no studies showing that it does cause autism.”

In that moment, Plotkin embraced a fallacy: treating the absence of evidence as evidence of absence.

“You’re making assumptions, Dr Plotkin,” Siri challenged. “It would be a bit premature to make the unequivocal, sweeping statement that vaccines do not cause autism, correct?”

Plotkin relented. “As a scientist, I would say that I do not have evidence one way or the other.”

The MMR

The deposition also exposed the fragile foundations of the measles, mumps, and rubella (MMR) vaccine.

When Siri asked for evidence of randomised, placebo-controlled trials conducted before MMR’s licensing, Plotkin pushed back: “To say that it hasn’t been tested is absolute nonsense,” he said, claiming it had been studied “extensively.”

Pressed to cite a specific trial, Plotkin couldn’t name one. Instead, he gestured to his own 1,800-page textbook: “You can find them in this book, if you wish.”

Siri replied that he wanted an actual peer-reviewed study, not a reference to Plotkin’s own book. “So you’re not willing to provide them?” he asked. “You want us to just take your word for it?”

Plotkin became visibly frustrated.

Eventually, he conceded there wasn’t a single randomised, placebo-controlled trial. “I don’t remember there being a control group for the studies I’m recalling,” he said.

The exchange foreshadowed a broader shift in public discourse, highlighting long-standing concerns that some combination vaccines were effectively grandfathered into the schedule without adequate safety testing.

In September 2025, President Trump called for the MMR vaccine to be broken up into three separate injections.

The proposal echoed a view that Andrew Wakefield had voiced decades earlier — namely, that combining all three viruses into a single shot might pose greater risk than spacing them out.

Wakefield was vilified and struck from the medical register. But now, that same question — once branded as dangerous misinformation — is set to be re-examined by the CDC’s new vaccine advisory committee, chaired by Martin Kulldorff.

The Aluminium Adjuvant Blind Spot

Siri next turned to aluminium adjuvants — the immune-activating agents used in many childhood vaccines.

When asked whether studies had compared animals injected with aluminium to those given saline, Plotkin conceded that research on their safety was limited.

Siri pressed further, asking if aluminium injected into the body could travel to the brain. Plotkin replied, “I have not seen such studies, no, or not read such studies.”

When presented with a series of papers showing that aluminium can migrate to the brain, Plotkin admitted he had not studied the issue himself, acknowledging that there were experiments “suggesting that that is possible.”

Asked whether aluminium might disrupt neurological development in children, Plotkin stated, “I’m not aware that there is evidence that aluminum disrupts the developmental processes in susceptible children.”

Taken together, these exchanges revealed a striking gap in the evidence base.

Compounds such as aluminium hydroxide and aluminium phosphate have been injected into babies for decades, yet no rigorous studies have ever evaluated their neurotoxicity against an inert placebo.

This issue returned to the spotlight in September 2025, when President Trump pledged to remove aluminium from vaccines, and world-leading researcher Dr Christopher Exley renewed calls for its complete reassessment.

A Broken Safety Net

Siri then turned to the reliability of the Vaccine Adverse Event Reporting System (VAERS) — the primary mechanism for collecting reports of vaccine-related injuries in the United States.

Did Plotkin believe most adverse events were captured in this database?

“I think…probably most are reported,” he replied.

But Siri showed him a government-commissioned study by Harvard Pilgrim, which found that fewer than 1% of vaccine adverse events are reported to VAERS.

“Yes,” Plotkin said, backtracking. “I don’t really put much faith into the VAERS system…”

Yet this is the same database officials routinely cite to claim that “vaccines are safe.”

Ironically, Plotkin himself recently co-authored a provocative editorial in the New England Journal of Medicine, conceding that vaccine safety monitoring remains grossly “inadequate.”

Experimenting on the Vulnerable

Perhaps the most chilling part of the deposition concerned Plotkin’s history of human experimentation.

“Have you ever used orphans to study an experimental vaccine?” Siri asked.

“Yes,” Plotkin replied.

“Have you ever used the mentally handicapped to study an experimental vaccine?” Siri asked.

“I don’t recollect…I wouldn’t deny that I may have done so,” Plotkin replied.

Siri cited a study conducted by Plotkin in which he had administered experimental rubella vaccines to institutionalised children who were “mentally retarded.”

Plotkin stated flippantly, “Okay well, in that case…that’s what I did.”

There was no apology, no sign of ethical reflection — just matter-of-fact acceptance.

Siri wasn’t done.

He asked if Plotkin had argued that it was better to test on those “who are human in form but not in social potential” rather than on healthy children.

Plotkin admitted to writing it.

Siri established that Plotkin had also conducted vaccine research on the babies of imprisoned mothers, and on colonised African populations.

Plotkin appeared to suggest that the scientific value of such studies outweighed the ethical lapses—an attitude that many would interpret as the classic ‘ends justify the means’ rationale.

But that logic fails the most basic test of informed consent. Siri asked whether consent had been obtained in these cases.

“I don’t remember…but I assume it was,” Plotkin said.

Assume?

This was post-Nuremberg research. And the leading vaccine developer in America couldn’t say for sure whether he had properly informed the people he experimented on.

In any other field of medicine, such lapses would be disqualifying.

A Casual Dismissal of Parental Rights

Plotkin’s indifference did not stop at experiments on disabled children.

Siri asked whether someone who declined a vaccine due to concerns about missing safety data should be labelled “anti-vax.”

Plotkin replied, “If they refused to be vaccinated themselves or refused to have their children vaccinated, I would call them an anti-vaccination person, yes.”

Plotkin was less concerned about adults making that choice for themselves, but he had no tolerance for parents making those choices for their own children.

“The situation for children is quite different,” said Plotkin, “because one is making a decision for somebody else and also making a decision that has important implications for public health.”

In Plotkin’s view, the state held greater authority than parents over a child’s medical decisions — even when the science was uncertain.

The Enabling of Figures Like Plotkin

The Plotkin deposition stands as a case study in how conflicts of interest, ideology, and deference to authority have corroded the scientific foundations of public health.

Plotkin is no fringe figure. He is celebrated, honoured, and revered. Yet he promotes vaccines that have never undergone true placebo-controlled testing, shrugs off the failures of post-market surveillance, and admits to experimenting on vulnerable populations.

This is not conjecture or conspiracy — it is sworn testimony from the man who helped build the modern vaccine program.

Now, as Health Secretary Robert F. Kennedy, Jr. reopens long-dismissed questions about aluminium adjuvants and the absence of long-term safety studies, Plotkin’s once-untouchable legacy is beginning to fray.

Republished from the author’s Substack

Brownstone Institute

Bizarre Decisions about Nicotine Pouches Lead to the Wrong Products on Shelves

From the Brownstone Institute

A walk through a dozen convenience stores in Montgomery County, Pennsylvania, says a lot about how US nicotine policy actually works. Only about one in eight nicotine-pouch products for sale is legal. The rest are unauthorized—but they’re not all the same. Some are brightly branded, with uncertain ingredients, not approved by any Western regulator, and clearly aimed at impulse buyers. Others—like Sweden’s NOAT—are the opposite: muted, well-made, adult-oriented, and already approved for sale in Europe.

Yet in the United States, NOAT has been told to stop selling. In September 2025, the Food and Drug Administration (FDA) issued the company a warning letter for offering nicotine pouches without marketing authorization. That might make sense if the products were dangerous, but they appear to be among the safest on the market: mild flavors, low nicotine levels, and recyclable paper packaging. In Europe, regulators consider them acceptable. In America, they’re banned. The decision looks, at best, strange—and possibly arbitrary.

What the Market Shows

My October 2025 audit was straightforward. I visited twelve stores and recorded every distinct pouch product visible for sale at the counter. If the item matched one of the twenty ZYN products that the FDA authorized in January, it was counted as legal. Everything else was counted as illegal.

Two of the stores told me they had recently received FDA letters and had already removed most illegal stock. The other ten stores were still dominated by unauthorized products—more than 93 percent of what was on display. Across all twelve locations, about 12 percent of products were legal ZYN, and about 88 percent were not.

The illegal share wasn’t uniform. Many of the unauthorized products were clearly high-nicotine imports with flashy names like Loop, Velo, and Zimo. Some of these products may be fine, but others are probably high in contaminants, and a few have very high nicotine levels. Others were subdued, plainly meant for adult users. NOAT was a good example of that second group: simple packaging, oat-based filler, restrained flavoring, and branding that makes no effort to look “cool.” It’s the kind of product any regulator serious about harm reduction would welcome.

Enforcement Works

To the FDA’s credit, enforcement does make a difference. The two stores that received official letters quickly pulled their illegal stock. That mirrors the agency’s broader efforts this year: new import alerts to detain unauthorized tobacco products at the border (see also Import Alert 98-06), and hundreds of warning letters to retailers, importers, and distributors.

But effective enforcement can’t solve a supply problem. The list of legal nicotine-pouch products is still extremely short—only a narrow range of ZYN items. Adults who want more variety, or stores that want to meet that demand, inevitably turn to gray-market suppliers. The more limited the legal catalog, the more the illegal market thrives.

Why the NOAT Decision Appears Bizarre

The FDA’s own actions make the situation hard to explain. In January 2025, it authorized twenty ZYN products after finding that they contained far fewer harmful chemicals than cigarettes and could help adult smokers switch. That was progress. But nine months later, the FDA has approved nothing else—while sending a warning letter to NOAT, arguably the least youth-oriented pouch line in the world.

The outcome is bad for legal sellers and public health. ZYN is legal; a handful of clearly risky, high-nicotine imports continue to circulate; and a mild, adult-market brand that meets European safety and labeling rules is banned. Officially, NOAT’s problem is procedural—it lacks a marketing order. But in practical terms, the FDA is punishing the very design choices it claims to value: simplicity, low appeal to minors, and clean ingredients.

This approach also ignores the differences in actual risk. Studies consistently show that nicotine pouches have far fewer toxins than cigarettes and far less variability than many vapes. The biggest pouch concerns are uneven nicotine levels and occasional traces of tobacco-specific nitrosamines, depending on manufacturing quality. The serious contamination issues—heavy metals and inconsistent dosage—belong mostly to disposable vapes, particularly the flood of unregulated imports from China. Treating all “unauthorized” products as equally bad blurs those distinctions and undermines proportional enforcement.

A Better Balance: Enforce Upstream, Widen the Legal Path

My small Montgomery County survey suggests a simple formula for improvement.

First, keep enforcement targeted and focused on suppliers, not just clerks. Warning letters clearly change behavior at the store level, but the biggest impact will come from auditing distributors and importers, and stopping bad shipments before they reach retail shelves.

Second, make compliance easy. A single-page list of authorized nicotine-pouch products—currently the twenty approved ZYN items—should be posted in every store and attached to distributor invoices. Point-of-sale systems can block barcodes for anything not on the list, and retailers could affirm, once a year, that they stock only approved items.

Third, widen the legal lane. The FDA launched a pilot program in September 2025 to speed review of new pouch applications. That program should spell out exactly what evidence is needed—chemical data, toxicology, nicotine release rates, and behavioral studies—and make timely decisions. If products like NOAT meet those standards, they should be authorized quickly. Legal competition among adult-oriented brands will crowd out the sketchy imports far faster than enforcement alone.
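The point-of-sale check proposed in the second step need not be complicated. What follows is a minimal sketch in Python, not a description of any real register system. The barcode values are placeholders rather than real product codes, and the names `AUTHORIZED_BARCODES`, `normalize_barcode`, `can_sell`, and `filter_cart` are hypothetical. It assumes the authorized list is available to the store as a plain set of barcodes.

```python
# Hypothetical allowlist; these are placeholder values, not real product barcodes.
AUTHORIZED_BARCODES = frozenset({"000000000001", "000000000002"})


def normalize_barcode(raw: str) -> str:
    """Strip surrounding whitespace, hyphens, and spaces from scanner output."""
    return raw.strip().replace("-", "").replace(" ", "")


def can_sell(raw_barcode: str, allowlist=AUTHORIZED_BARCODES) -> bool:
    """Return True only when the scanned barcode is on the authorized list."""
    return normalize_barcode(raw_barcode) in allowlist


def filter_cart(barcodes, allowlist=AUTHORIZED_BARCODES):
    """Split scanned items into (sellable, blocked), preserving scan order."""
    sellable, blocked = [], []
    for code in barcodes:
        (sellable if can_sell(code, allowlist) else blocked).append(code)
    return sellable, blocked
```

The design choice worth noting is that the list is an allowlist: anything not explicitly authorized is blocked by default, so a new unauthorized product cannot be sold merely because nobody has added it to a ban list yet.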

The Bottom Line

Enforcement matters, and the data show it works—where it happens. But the legal market is too narrow to protect consumers or encourage innovation. The current regime leaves a few ZYN products as lonely legal islands in a sea of gray-market pouches that range from sensible to reckless.

The FDA’s treatment of NOAT stands out as a case study in inconsistency: a quiet, adult-focused brand approved in Europe yet effectively banned in the US, while flashier and riskier options continue to slip through. That’s not a public-health victory; it’s a missed opportunity.

If the goal is to help adult smokers move to lower-risk products while keeping youth use low, the path forward is clear: enforce smartly, make compliance easy, and give good products a fair shot. Right now, we’re doing the first part well—but failing at the second and third. It’s time to fix that.

-

Censorship Industrial Complex10 hours ago

Censorship Industrial Complex10 hours agoUS Under Secretary of State Slams UK and EU Over Online Speech Regulation, Announces Release of Files on Past Censorship Efforts

-

Business13 hours ago

Business13 hours ago“Magnitude cannot be overstated”: Minnesota aid scam may reach $9 billion

-

Alberta2 days ago

Alberta2 days agoAlberta project would be “the biggest carbon capture and storage project in the world”

-

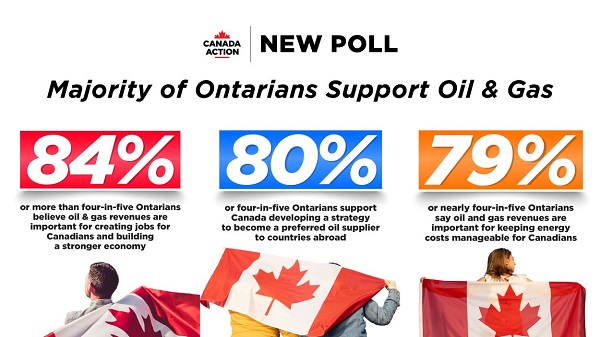

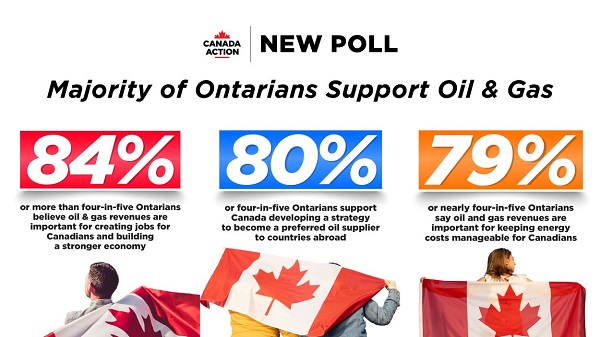

Energy2 days ago

Energy2 days agoNew Poll Shows Ontarians See Oil & Gas as Key to Jobs, Economy, and Trade

-

Daily Caller1 day ago

Daily Caller1 day agoIs Ukraine Peace Deal Doomed Before Zelenskyy And Trump Even Meet At Mar-A-Lago?

-

Alberta2 days ago

Alberta2 days agoAlberta Next Panel calls for less Ottawa—and it could pay off

-

Energy2 days ago

Energy2 days agoWhile Western Nations Cling to Energy Transition, Pragmatic Nations Produce Energy and Wealth

-

Business12 hours ago

Business12 hours agoLargest fraud in US history? Independent Journalist visits numerous daycare centres with no children, revealing massive scam