Artificial Intelligence

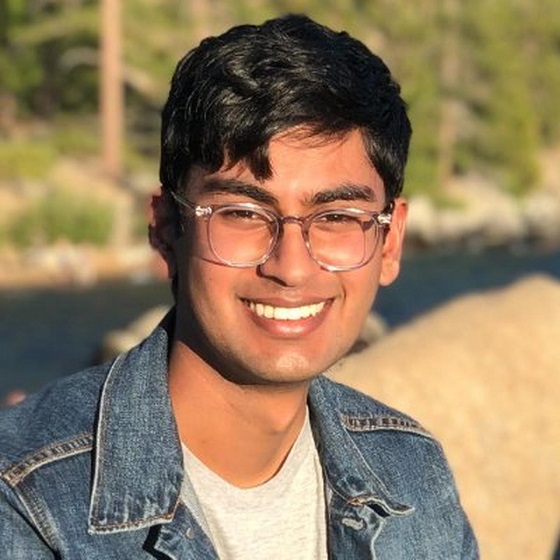

Death of an Open A.I. Whistleblower

By John Leake

Suchir Balaji was trying to warn the world of the dangers of OpenAI when he was found dead in his apartment. His story suggests that San Francisco has become an open sewer of corruption.

According to Wikipedia:

Suchir Balaji (1998 – November 26, 2024) was an artificial intelligence researcher and former employee of OpenAI, where he worked from 2020 until 2024. He gained attention for his whistleblowing activities related to artificial intelligence ethics and the inner workings of OpenAI.

Balaji was found dead in his home on November 26, 2024. San Francisco authorities determined the death was a suicide, though Balaji’s parents have disputed the verdict.

Balaji’s mother just gave an extraordinary interview with Tucker Carlson that is well worth watching.

A quick Google search of David Serrano Sewell, executive director of San Francisco’s Office of the Chief Medical Examiner, turned up a Feb. 8, 2024 report in the San Francisco Standard headlined “San Francisco official likely tossed out human skull, lawsuit says.” According to the report:

The disappearance of a human skull has spurred a lawsuit against the top administrator of San Francisco’s medical examiner’s office from an employee who alleges she faced retaliation for reporting the missing body part.

Sonia Kominek-Adachi alleges in a lawsuit filed Monday that she was terminated from her job as a death investigator after finding that the executive director of the office, David Serrano Sewell, may have “inexplicably” tossed the skull while rushing to clean up the office ahead of an inspection.

Kominek-Adachi made the discovery in January 2023 while doing an inventory of body parts held by the office, her lawsuit says. Her efforts to raise an alarm around the missing skull allegedly led up to her firing last October.

If the allegations of this lawsuit are true, they suggest that Mr. Serrano Sewell is an unscrupulous and vindictive man. According to the SF Gov website:

Serrano Sewell joined the OCME with over 16 years of experience developing management structures, building consensus, and achieving policy improvements in the public, nonprofit, and private sectors. He previously served as a Mayor’s aide, Deputy City Attorney, and a policy advocate for public and nonprofit hospitals.

In other words, he is an old denizen of the San Francisco city machine. If a mafia-like organization has penetrated the city administration, it would be well-served by having a key player run the medical examiner’s office.

According to Balaji’s mother, Poornima Ramarao, his death was an obvious murder that was crudely staged to look like a suicide. The responding police officers spent only forty minutes examining the scene and then left the body in the apartment to be retrieved by medical examiner field agents the next day. If true, this was an act of breathtaking negligence.

I have written a book about two murders that were staged to look like suicides, and to me, Mrs. Ramarao’s story sounds highly credible. Balaji kept a pistol in his apartment for self-defense because he felt that his life was possibly in danger. He was found shot in the head with this pistol, which was purportedly found in his hand. If his death was indeed a murder staged to look like a suicide, it raises the suspicion that the assailant knew that Balaji possessed this pistol and where he kept it in his apartment.

Balaji was found with a gunshot wound to his head—fired from above, the bullet apparently traversing downward through his face and missing his brain. However, he had also sustained what—based on his mother’s testimony—sounds like a blunt force injury on the left side of the head, suggesting a right-handed assailant initially struck him with a blunt instrument that may have knocked him unconscious or stunned him. The gunshot was apparently inflicted after the attack with the blunt instrument.

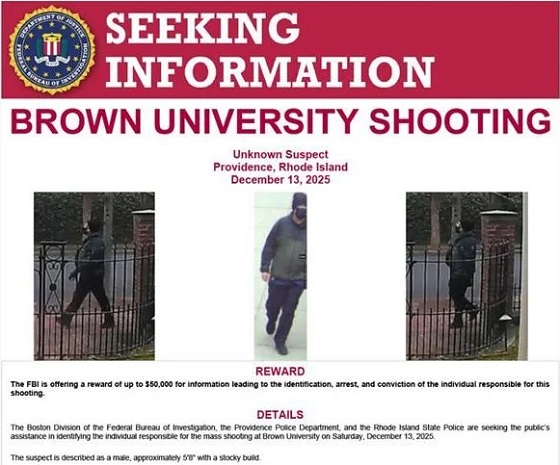

A fragment of a bloodstained wig found in the apartment suggests the assailant wore a wig to disguise himself in case he was caught on the surveillance camera at the building’s main entrance. No surveillance camera was positioned over the entrance to Balaji’s apartment.

How did the assailant enter Balaji’s apartment? Did Balaji know the assailant and let him in? Alternatively, did the assailant somehow—perhaps through a contact in the building’s management—obtain a key to the apartment?

All of these questions could probably be easily answered with a proper investigation, but it sounds like the responding officers hastily concluded it was a suicide, and the medical examiner’s office hastily confirmed their initial perception. If good crime scene photographs could be obtained, a decent bloodstain pattern analyst could probably reconstruct what happened to Balaji.

Vernon J. Geberth, a retired Lieutenant Commander of the New York City Police Department, has written extensively about how homicides are often erroneously perceived to be suicides by responding officers. The initial perception of suicide at a death scene often results in a lack of proper analysis. His essay “The Seven Major Mistakes in Suicide Investigation” should be required reading for every police officer whose job includes examining the scenes of unattended deaths.

However, judging by his mother’s testimony, Suchir Balaji’s death was obviously a murder staged to look like a suicide. Someone in a position of power decided it was best to perform only the most cursory investigation and to rule the manner of death a suicide based on the mere fact that the pistol was purportedly found in the victim’s hand.

Readers who are interested in learning more about this kind of crime may want to watch my documentary film, in which I examine two murders that were staged to look like suicides. Incidentally, the film is now showing in the Hollywood North International Film Festival.


Subscribe to Courageous Discourse™ with Dr. Peter McCullough & John Leake.



Alberta

Schools should go back to basics to mitigate effects of AI

From the Fraser Institute

Odds are, you can’t tell whether this sentence was written by AI. Schools across Canada face the same problem. And happily, some are finding simple solutions.

Manitoba’s Division Scolaire Franco-Manitobaine recently issued new guidelines directing teachers to assign only optional homework and reading in kindergarten to grade six, and to limit homework in grades seven to 12. The reason? The proliferation of generative artificial intelligence (AI) chatbots such as ChatGPT makes it very difficult for teachers, who are juggling a heavy workload, to distinguish genuine student work from AI-generated text. In fact, according to Division superintendent Alain Laberge, “Most of the [after-school assignment] submissions, we find, are coming from AI, to be quite honest.”

This problem isn’t limited to Manitoba, of course.

Two provincial doors down, in Alberta, a new data analysis revealed that high school report card grades are rising while scores on provincewide assessments are not, particularly since 2022, the year ChatGPT was released. Report card grades reflect take-home work, while standardized tests are written in person, in the presence of teaching staff.

Specifically, from 2016 to 2019, the average standardized test score in Alberta across a range of subjects was 64, while the average report card grade was 73.3, or 9.3 percentage points higher. From 2022 to 2024, the gap increased to 12.5 percentage points. (Data for 2020 and 2021 are unavailable due to COVID school closures.)

In lieu of take-home work, the Division Scolaire Franco-Manitobaine recommends nightly reading for students, which is a great idea. Having students read nightly doesn’t cost schools a dime, but it’s strongly associated with improved academic outcomes.

According to a Programme for International Student Assessment (PISA) analysis of 174,000 student scores across 32 countries, the connection between daily reading and literacy was “moderately strong and meaningful,” and reading engagement affects reading achievement more than the socioeconomic status, gender or family structure of students.

All of this points to an undeniable shift in education—that is, teachers are losing a once-valuable tool (homework) and shifting more work back into the classroom. And while new technologies will continue to change the education landscape in heretofore unknown ways, one time-tested winning strategy is to go back to basics.

And some of “the basics” have slipped rapidly away. Some college students in elite universities arrive on campus never having read an entire book. Many university professors bemoan the newfound inability of students to write essays or deconstruct basic story components. Canada’s average PISA scores—a test of 15-year-olds in math, reading and science—have plummeted. In math, student test scores have dropped 35 points—the PISA equivalent of nearly two years of lost learning—in the last two decades. In reading, students have fallen about one year behind while science scores dropped moderately.

The decline in Canadian student achievement predates widespread access to generative AI, but AI complicates the problem. Again, the solution needn’t be costly or complicated. There’s a reason why many tech CEOs famously send their children to screen-free schools. If technology is too tempting, in or outside of class, students should write with a pencil and paper. If ChatGPT is too hard to detect (and we know it is, because even AI often can’t accurately detect AI), in-class essays and assignments make sense.

And crucially, standardized tests provide the most reliable and equitable measure of student progress, and if properly monitored, they’re AI-proof. Yet standardized testing is on the wane in Canada, thanks to long-standing attacks from teacher unions and other opponents, and despite broad support from parents. Now more than ever, parents and educators require reliable data to assess the ability of students. Standardized testing varies widely among the provinces, but parents in every province should demand a strong standardized testing regime.

AI may be here to stay and it may play a large role in the future of education. But if schools deprive students of the ability to read books, structure clear sentences, correspond organically with other humans and complete their own work, they will do students no favours. The best way to ensure kids are “future ready”—to borrow a phrase oft-used to justify seesawing educational tech trends—is to school them in the basics.
