Censorship Industrial Complex

World Economic Forum lists ‘disinformation’ and ‘climate change’ as most severe threats in 2024

From LifeSiteNews

By Joe Kovacs

The World Economic Forum’s Global Risks Report 2024 says the world is ‘plagued by a duo of dangerous crises: climate and conflict,’ which are ‘set against a backdrop of rapidly accelerating technological change and economic uncertainty.’

The World Economic Forum, the group of global elites whom those on the political right love to hate, has just issued its report on the biggest threats in 2024 and beyond.

But the top of its list of risks is not climate, at least not in the short term.

The WEF, based in Davos, Switzerland, says the biggest short-term risk stems from fake news.

“While climate-related risks remain a dominant theme, the threat from misinformation and disinformation is identified as the most severe short-term threat in the 2024 report,” the group indicated.

“The cascading shocks that have beset the world in recent years are proving intractable. War and conflict, polarized politics, a continuing cost-of-living crisis and the ever-increasing impacts of a changing climate are destabilizing the global order.”

“The report reveals a world ‘plagued by a duo of dangerous crises: climate and conflict.’ These threats are set against a backdrop of rapidly accelerating technological change and economic uncertainty.”

The globalists say “the growing concern about misinformation and disinformation is in large part driven by the potential for AI, in the hands of bad actors, to flood global information systems with false narratives.”

The report states that over the next two years, “foreign and domestic actors alike will leverage misinformation and disinformation to widen societal and political divides.”

It indicates the threat is heightened by major elections, with more than 3 billion people, including voters in the U.S., Britain, and India, heading to the polls in 2024 and 2025.

The report suggests the spread of mis- and disinformation could result in civil unrest, but could also prompt government censorship, domestic propaganda and controls on the free flow of information.

Rounding out the top ten risks for the next two years are: extreme weather events, societal polarization, cyber insecurity, interstate armed conflict, lack of economic opportunity, inflation, involuntary migration, economic downturn and pollution.

The ten-year list of risks puts extreme weather events at No. 1, followed by critical change to Earth systems, biodiversity loss and ecosystem collapse, natural resource shortages, misinformation and disinformation, adverse outcomes of AI technologies, involuntary migration, cyber insecurity, societal polarization and pollution.

Reprinted with permission from the WND News Center.

Business

‘Great Reset’ champion Klaus Schwab resigns from WEF

From LifeSiteNews

Schwab’s World Economic Forum became a globalist hub for population control, radical climate agenda, and transhuman ideology under his decades-long leadership.

Klaus Schwab, founder of the World Economic Forum and the face of the NGO’s elitist annual get-together in Davos, Switzerland, has resigned as chair of WEF.

Over the decades, but especially over the past several years, the WEF’s Davos annual symposium has become a lightning rod for conservative criticism due to the agendas being pushed there by the elites. As the Associated Press noted:

Widely regarded as a cheerleader for globalization, the WEF’s Davos gathering has in recent years drawn criticism from opponents on both left and right as an elitist talking shop detached from lives of ordinary people.

While WEF itself had no formal power, the annual Davos meeting brought together many of the world’s wealthiest and most influential figures, contributing to Schwab’s personal worth and influence.

The Geneva-based WEF announced on April 21 that Schwab had resigned on April 20, but it did not indicate why the 87-year-old was stepping down. “Following my recent announcement, and as I enter my 88th year, I have decided to step down from the position of Chair and as a member of the Board of Trustees, with immediate effect,” Schwab said in a brief statement. He gave no indication of what he plans to do next.

Schwab founded the World Economic Forum – originally the European Management Forum – in 1971, and its initial mission was to assist European business leaders in competing with American business and to learn from U.S. models and innovation. However, the mission soon expanded to the development of a global economic agenda.

Schwab detailed his own agenda in several books, including The Fourth Industrial Revolution (2016), in which he described the rise of a new industrial era in which technologies such as artificial intelligence, gene editing, and advanced robotics would blur the lines between the digital, physical, and biological worlds. Schwab wrote:

We stand on the brink of a technological revolution that will fundamentally alter the way we live, work, and relate to one another. In its scale, scope, and complexity, the transformation will be unlike anything humankind has experienced before. We do not yet know just how it will unfold, but one thing is clear: the response to it must be integrated and comprehensive, involving all stakeholders of the global polity, from the public and private sectors to academia and civil society …

The Fourth Industrial Revolution, finally, will change not only what we do but also who we are. It will affect our identity and all the issues associated with it: our sense of privacy, our notions of ownership, our consumption patterns, the time we devote to work and leisure, and how we develop our careers, cultivate our skills, meet people, and nurture relationships. It is already changing our health and leading to a “quantified” self, and sooner than we think it may lead to human augmentation.

How? Microchips implanted into humans, for one. Schwab was a tech optimist who appeared to heartily welcome transhumanism; in a 2016 interview with France 24 discussing his book, he stated:

And then you have the microchip, which will be implanted, probably within the next ten years, first to open your car, your home, or to do your passport, your payments, and then it will be in your body to monitor your health.

In 2020, mere months into the pandemic, Schwab published COVID-19: The Great Reset, in which he detailed his view of the opportunity presented by the growing global crisis. According to Schwab, the crisis was an opportunity for a global reset that included “stakeholder capitalism,” in which corporations could integrate social and environmental goals into their operations, especially working toward “net-zero emissions” and a massive transition to green energy, and “harnessing” the Fourth Industrial Revolution, including artificial intelligence and automation.

Much of Schwab’s personal wealth came from running the World Economic Forum; as chairman, he earned an annual salary of 1 million Swiss francs (approximately US$1 million), and the WEF was supported financially through membership fees from over 1,000 companies worldwide as well as significant contributions from organizations such as the Bill & Melinda Gates Foundation. Vice Chairman Peter Brabeck-Letmathe is now serving as interim chairman until a permanent successor is selected.

Business

Ted Cruz, Jim Jordan Ramp Up Pressure On Google Parent Company To Deal With ‘Censorship’

From the Daily Caller News Foundation

By Andi Shae Napier

Republican Texas Sen. Ted Cruz and Republican Ohio Rep. Jim Jordan are turning their attention to Google over concerns that the tech giant is censoring users and infringing on Americans’ free speech rights.

Google’s parent company Alphabet, which also owns YouTube, appears to be the GOP’s next Big Tech target. Lawmakers seem to be turning their attention to Alphabet after Mark Zuckerberg’s Meta ended its controversial fact-checking program in favor of a Community Notes system similar to the one used by Elon Musk’s X.

Cruz recently informed reporters of his and fellow senators’ plans to protect free speech.


“Stopping online censorship is a major priority for the Commerce Committee,” Cruz said, as reported by Politico. “And we are going to utilize every point of leverage we have to protect free speech online.”

Following his meeting with Alphabet CEO Sundar Pichai last month, Cruz told the outlet, “Big Tech censorship was the single most important topic.”

Jordan, Chairman of the House Judiciary Committee, sent subpoenas to Alphabet and other tech giants such as Rumble, TikTok and Apple in February regarding “compliance with foreign censorship laws, regulations, judicial orders, or other government-initiated efforts” with the intent to discover how foreign governments, or the Biden administration, have limited Americans’ access to free speech.

“Throughout the previous Congress, the Committee expressed concern over YouTube’s censorship of conservatives and political speech,” Jordan wrote in a letter to Pichai in March. “To develop effective legislation, such as the possible enactment of new statutory limits on the executive branch’s ability to work with Big Tech to restrict the circulation of content and deplatform users, the Committee must first understand how and to what extent the executive branch coerced and colluded with companies and other intermediaries to censor speech.”

Jordan subpoenaed tech CEOs in 2023 as well, including Satya Nadella of Microsoft, Tim Cook of Apple and Pichai, among others.

Although the latest action is recent, the battle stretches back to President Donald Trump’s first administration. Cruz began his investigation of Google in 2019 when he questioned Karan Bhatia, the company’s Vice President for Government Affairs & Public Policy at the time, in a Senate Judiciary Committee hearing. Cruz brought forth a presentation suggesting tech companies, including Google, were straying from free speech and leaning towards censorship.

Even during Congress’ recess, pressure on Google continues to mount: a federal court ruled Thursday that Google’s ad-tech unit violated U.S. antitrust laws and created an illegal monopoly. This marks the second antitrust ruling against the tech giant, after a different court ruled in 2024 that Google abused its dominance of the online search market.

-

2025 Federal Election15 hours ago

2025 Federal Election15 hours agoBREAKING: THE FEDERAL BRIEF THAT SHOULD SINK CARNEY

-

2025 Federal Election15 hours ago

2025 Federal Election15 hours agoCHINESE ELECTION THREAT WARNING: Conservative Candidate Joe Tay Paused Public Campaign

-

2025 Federal Election1 day ago

2025 Federal Election1 day agoOttawa Confirms China interfering with 2025 federal election: Beijing Seeks to Block Joe Tay’s Election

-

2025 Federal Election1 day ago

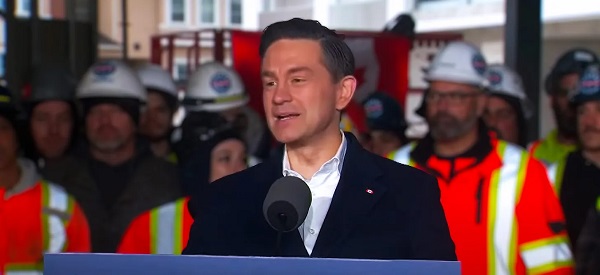

2025 Federal Election1 day agoReal Homes vs. Modular Shoeboxes: The Housing Battle Between Poilievre and Carney

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoCarney’s budget means more debt than Trudeau’s

-

International2 days ago

International2 days agoPope Francis has died aged 88

-

Business2 days ago

Business2 days agoCanada Urgently Needs A Watchdog For Government Waste

-

2025 Federal Election1 day ago

2025 Federal Election1 day agoHow Canada’s Mainstream Media Lost the Public Trust