Brownstone Institute

Why Is Our Education System Failing to Educate?

From the Brownstone Institute

I suspect many of you know my story. But, for those who don’t, the short version is that I taught philosophy — ethics and ancient philosophy, in particular — at Western University in Canada until September 2021 when I was very publicly terminated “with cause” for refusing to comply with Western’s COVID-19 policy.

What I did — question, critically evaluate and, ultimately, challenge what we now call “the narrative” — is risky behaviour. It got me fired, labeled an “academic pariah,” chastised by mainstream media, and vilified by my peers. But this ostracization and vilification, it turns out, was just a symptom of a shift towards a culture of silence, nihilism, and mental atrophy that had been brewing for a long time.

You know that parental rhetorical question, “So if everyone jumped off a cliff, would you do it too?” It turns out that about 90 percent would jump, and that most of them wouldn’t ask any questions about the height of the cliff, alternative options, accommodations for the injured, etc. What was supposed to be a cautionary rhetorical joke has become the modus operandi of the Western world.

Admittedly, I am a bit of an odd choice as the keynote speaker for an education conference. I have no specialized training in the philosophy of education or in pedagogy. In graduate school, you receive little formal instruction about how to teach. You learn by experience, research, trial by fire, and by error. And, of course, I was terminated from my position as a university teacher. But I do think a lot about education. I look at how many people are willing to outsource their thinking and I wonder, what went wrong? Confronted with the products of our public school system every day for 20 years, I wonder what went wrong? And, finally, as the mother of a 2-year-old, I think a lot about what happens in the early years to encourage a better outcome than we are seeing today.

My aim today is to talk a bit about what I saw in university students during my teaching career, why I think the education system failed them, and the only two basic skills any student at any age really needs.

Let’s start by doing something I used to do regularly in class, something some students loved and others hated. Let’s brainstorm some answers to this question: What does it mean to “be educated?”

[Answers from the audience included: “to acquire knowledge,” “to learn the truth,” “to develop a set of required skills,” “to get a degree.”]

Many answers were admirable but I noticed that most describe education passively: “to be educated,” “to get a degree,” “to be informed” are all passive verbs.

When it comes to writing, we are often told to use the active voice. It is clearer, more emphatic, and creates greater emotional impact. And yet the predominant way we describe education is passive. But is education really a passive experience? Is it something that just happens to us like getting rained on or being scratched by a cat? And do you need to be acted on by someone else in order to become educated? Or is education a more active, personal, emphatic and impactful experience? Might “I am educating,” “I am learning” be more accurate descriptions?

My experience in the classroom was certainly consistent with thinking of education as a passive experience. Over the years, I saw an increasing trend towards timidity, conformity and apathy, all signs of educational passivity. But this was a stark departure from the university culture that met me as an undergraduate in the mid-90s.

As an undergraduate, my classes were robust theaters of The Paper Chase-style effervescent debate. But there was a palpable shift sometime in the late 90s. A hush fell over the classroom. Topics once relied on to ignite discussion — abortion, slavery, capital punishment — no longer held the same appeal. Fewer and fewer hands went up. Students trembled at the thought of being called on and, when they did speak, they parroted a set of ‘safe’ ideas and frequently used “of course” to refer to ideas that would allow them to safely navigate the Scylla and Charybdis of topics considered to be off-limits by the woke zealots.

The stakes are even higher now. Students who question or refuse to comply are rejected or de-enrolled. Recently, an Ontario university student was suspended for asking for a definition of “colonialism.” Merely asking for clarification in the 21st century is academic heresy. Professors like myself are punished or terminated for speaking out, and our universities are becoming increasingly closed systems in which autonomous thought is a threat to the neoliberal groupthink model of ‘education.’

I spent some time thinking in concrete terms about the traits I saw in the novel 21st-century student. With some exceptions, most students suffer from the following symptoms of our educational failure. They are (for the most part):

- “Information-focused,” not “wisdom-interested:” they are computational, able to input and output information (more or less), but lack the critical ability to understand why they are doing so or to manipulate the data in unique ways.

- Science and technology worshipping: they treat STEM (science, technology, engineering and mathematics) as a god, as an end in itself rather than an instrument to achieve some end.

- Intolerant of uncertainty, complications, gray areas, and open questions, and generally unable to formulate questions themselves.

- Apathetic, unhappy, even miserable (and I’m not sure they ever felt otherwise so they may not recognize these states for what they are).

- Increasingly unable to engage in counterfactual thinking. (I will return to this idea in a moment.)

- Instrumentalist: everything they do is for the sake of something else.

To elaborate on this last point, when I used to ask my students why they were at university, the following sort of conversation would usually ensue:

Why did you come to university?

To get a degree.

Why?

So I can get into law school (nursing or some other impressive post-graduate program).

Why?

So I can get a good job.

Why?

The well of reflex answers typically started to dry up at that point. Some were honest that the lure of a “good job” was to attain money or a certain social status; others seemed genuinely perplexed by the question or would simply say: “My parents tell me I should,” “My friends are all doing it,” or “Society expects it.”

Being an instrumentalist about education means that you see it as valuable only as a way to obtain some further, non-educational good. Again, the passivity is palpable. In this view, education is something that gets poured into you. Once you get enough poured in, it’s time to graduate and unlock the door to the next life prize. But this makes education, for its own sake, meaningless and substitutable. Why not just buy the subject-specific microchip when it becomes available and avoid all the unpleasant studying, questioning, self-reflection, and skill-building?

Time has shown us where this instrumentalism has gotten us: we live in an era of pseudo-intellectuals, pseudo-students and pseudo-education, each of us becoming increasingly less clear why we need education (of the sort offered by our institutions), or how it’s helping to create a better world.

Why the change? How did intellectual curiosity and critical thinking get trained out of our universities? It’s complex but there are three factors that surely contributed:

- Universities became businesses. They became corporate entities with boards of governors, customers and ad campaigns. In early 2021, Huron College (where I worked) appointed its first board of governors with members from Rogers, Sobeys, and EllisDon, a move author Christopher Newfield calls the “great mistake.” Regulatory capture (of the sort that led the University of Toronto to partner with Moderna) is just one consequence of this collusion.

- Education became a commodity. Education is treated as a purchasable, exchangeable good, which fits well with the idea that education is something that can be downloaded to anyone’s empty mind. There is an implicit assumption of equality and mediocrity here; you must believe that every student is roughly the same in skill, aptitude, interest, etc. to be able to be filled this way.

- We mistook information for wisdom. Our inheritance from the Enlightenment, the idea that reason will allow us to conquer all, has morphed into information ownership and control. We need to appear informed to seem educated, and we shun the uninformed or misinformed. We align with the most acceptable source of information and forgo any critical assessment of how they attained that information. But this isn’t wisdom. Wisdom goes beyond information; it pivots on a sense of care, attention, and context, allowing us to sift through a barrage of information, selecting and acting only on the truly worthy.

This is a radical departure from the earliest universities, which began in the 4th century BC: Plato teaching in the grove of Academus, Epicurus in his private garden. When they met to discuss, there were no corporate partnerships, no boards of directors. They were drawn together by a shared love of questioning and problem-solving.

Out of these early universities was born the concept of liberal arts (grammar, logic, rhetoric, arithmetic, geometry, music and astronomy), studies which are “liberal” not because they are easy or unserious, but because they are suitable for those who are free (liberalis), as opposed to slaves or animals. In the era before SMEs (subject matter experts), these were the subjects thought to be essential preparation for becoming a good, well-informed citizen who is an effective participant in public life.

In this view, education is not something you receive and certainly not something you buy; it is a disposition, a way of life you create for yourself grounded in what Dewey called “skilled powers of thinking.” It helps you to become questioning, critical, curious, creative, humble and, ideally, wise.

The Lost Art of Counterfactual Thinking

I said earlier that I would return to the subject of counterfactual thinking, what it is, why it’s been lost and why it’s important. And I would like to start with another thought experiment: close your eyes and think about one thing that might have been different over the last 3 years that might have made things better.

What did you pick? No WHO pandemic declaration? A different PM or President? Effective media? More tolerant citizens?

Maybe you wondered, what if the world was more just? What if truth could really save us (quickly)?

This “what if” talk is, at its core, counterfactual thinking. We all do it. What if I had become an athlete, written more, scrolled less, married someone else?

Counterfactual thinking enables us to shift from perceiving the immediate environment to imagining a different one. It is key for learning from past experiences, planning and predicting (if I jump off the cliff, x is likely to happen), problem solving, innovation and creativity (maybe I’ll shift careers, arrange my kitchen drawers differently), and it is essential for improving an imperfect world. It also underpins moral emotions like regret and blame (I regret betraying my friend). Neurologically, counterfactual thinking depends on a network of systems for affective processing, mental simulation, and cognitive control, and impaired counterfactual thinking is a symptom of a number of mental illnesses, including schizophrenia.

I don’t think it would be an exaggeration to say that we have lost our ability for counterfactual thinking en masse. But why did this happen? There are a lot of factors — with political ones at the top of the list — but one thing that surely contributed is that we lost a sense of play.

Yes, play. Let me explain. With a few exceptions, our culture has a pretty cynical view of the value of play. Even when we do it, we see play time as wasted and messy, allowing for an intolerable number of mistakes and the possibility of outcomes that don’t fit neatly into an existing framework. This messiness is a sign of weakness, and weakness is a threat to our tribal culture.

I think our culture is intolerant of play because it is intolerant of individuality and of distractions from the messaging we’re “supposed” to hear. It is also intolerant of joy, of anything that helps us to feel healthier, more alive, more focused and more jubilant. Furthermore, it doesn’t result in immediate, “concrete deliverables.”

But what if there was more play in science, in medicine and in politics? What if politicians said “What if we did x instead? Let’s just try out the idea?” What if, instead of your doctor writing a script for the “recommended” pharmaceutical, s/he said “What if you reduced your sugar intake… or… tried walking more? Let’s just try.”

“The stick that stirs the drink”

The non-superficiality of play is hardly a new idea. It was central to the development of the culture of Ancient Greece, one of the greatest civilizations in the world. It is telling that Greek words for play (paidia), children (paides) and education (paideia) have the same root. For the Greeks, play was essential not just to sport and theatre, but to ritual, music, and of course word play (rhetoric).

The Greek philosopher Plato saw play as deeply influential to the way children develop as adults. We can prevent social disorder, he wrote, by regulating the nature of children’s play. In his Laws, Plato proposed harnessing play for certain purposes: “If a boy is to be a good farmer or a good builder, he should play at building toy houses or at farming and be provided by his tutor with miniature tools modelled on real ones…One should see games as a means of directing children’s tastes and inclinations to the role they will fill as adults.”

Play is also the basis of the Socratic method, the back-and-forth technique of questioning and answering, trying things out, generating contradictions and imagining alternatives to find better hypotheses. Dialectic is essentially playing with ideas.

A number of contemporaries agree with Plato. The philosopher Colin McGinn wrote in 2008 that “Play is a vital part of any full life, and a person who never plays is worse than a ‘dull boy:’ he or she lacks imagination, humour and a proper sense of value. Only the bleakest and most life-denying Puritanism could warrant deleting all play from human life…”

And Stuart Brown, founder of the National Institute for Play, wrote: “I don’t think it is too much to say that play can save your life. It certainly has salvaged mine. Life without play is a grinding, mechanical existence organized around doing things necessary for survival. Play is the stick that stirs the drink. It is the basis of all art, games, books, sports, movies, fashion, fun, and wonder — in short, the basis of what we think of as civilization.”

Education as Activity

Play is key but it’s not the only thing missing in modern education. The fact that we have lost it is a symptom, I think, of a more fundamental misunderstanding about what education is and is meant to do.

Let’s go back to the idea of education being an activity. Perhaps the most well-known quotation about education is “Education is not the filling of a pail, but the lighting of a fire.” It litters university recruitment pages, inspirational posters, mugs, and sweatshirts. Typically attributed to William Butler Yeats, the quotation is actually from Plutarch’s essay “On Listening” in which he writes “For the mind does not require filling like a bottle, but rather, like wood, it only requires kindling to create in it an impulse to think independently and an ardent desire for the truth.”

The way Plutarch contrasts learning with filling suggests that the latter was a common, but mistaken, idea. Strangely, we seem to have returned to the mistake and to the assumption that, once you get your bottle filled up, you are complete, you are educated. But if education is a kindling instead of a filling, how is the kindling achieved? How do you help to “create an impulse to think independently?” Let’s do another thought experiment.

If you knew that you could get away with anything, acting with complete impunity, what would you do?

There is a story from Plato’s Republic, Book II (discussing the value of justice) that fleshes out this question. Plato describes a shepherd who stumbles upon a ring that grants him the ability to become invisible. He uses his invisibility to seduce the queen, kill her king, and take over the kingdom. Glaucon, one of the interlocutors in the dialogue, suggests that, if there were two such rings, one given to a just man, and the other to an unjust man, there would be no difference between them; they would both take advantage of the ring’s powers, suggesting that anonymity is the only barrier between a just and an unjust person.

Refuting Glaucon, Socrates says that the truly just person will do the right thing even with impunity because he understands the true benefits of acting justly.

Isn’t this the real goal of education, namely to create a well-balanced person who loves learning and justice for their own sakes? This person understands that the good life consists not in seeming but in being, in having a balanced inner self that takes pleasure in the right things because of an understanding of what they offer.

In the first book of his canonical ethical text, Aristotle (Plato’s student) asks what is the good life? What does it consist of? His answer is an obvious one: happiness. But his view of happiness is a bit different from ours. It is a matter of flourishing, which means functioning well according to your nature. And functioning well according to human nature is achieving excellence in reasoning, both intellectually and morally. The intellectual virtues (internal goods) include: scientific knowledge, technical knowledge, intuition, practical wisdom, and philosophical wisdom. The moral virtues include: justice, courage, and temperance.

For Aristotle, what our lives look like from the outside — wealth, health, status, social media likes, reputation — are all “external goods.” It’s not that these are unimportant but we need to understand their proper place in the good life. Having the internal and external goods in their right proportion is the only way to become an autonomous, self-governing, complete person.

It’s pretty clear that we aren’t flourishing as a people, especially if the following are any indication: Canada recently ranked 15th on the World Happiness Report, we have unprecedented levels of anxiety and mental illness, and in 2021 a children’s mental health crisis was declared and the NIH reported an unprecedented number of drug overdose deaths.

By contrast with most young people today, the person who is flourishing and complete will put less stock in the opinions of others, including institutions, because they will have more fully developed internal resources and they will be more likely to recognize when a group is making a bad decision. They will be less vulnerable to peer pressure and coercion, and they will have more to rely on if they do become ostracized from the group.

Educating with a view to the intellectual and moral virtues develops a lot of other things we are missing: research and inquiry skills, physical and mental agility, independent thinking, impulse control, resilience, patience and persistence, problem solving, self-regulation, endurance, self-confidence, self-satisfaction, joy, cooperation, collaboration, negotiation, empathy, and even the ability to put energy into a conversation.

What should be the goals of education? It’s pretty simple (in conception even if not in execution). At any age, for any subject matter, the only two goals of education are:

- To create a self-ruled (autonomous) person from the ‘inside out,’ who…

- Loves learning for its own sake

Education, in this view, is not passive and it is never complete. It is always in process, always open, always humble and humbling.

My students, unfortunately, were like the Republic’s shepherd; they measured the quality of their lives by what they could get away with, by what their lives looked like from the outside. But their lives were like a shiny apple that, when you cut into it, is rotten on the inside. And their interior emptiness left them aimless, hopeless, dissatisfied and, ultimately, miserable.

But it doesn’t have to be this way. Imagine what the world would be like if it were made up of self-ruled people. Would we be happier? Would we be healthier? Would we be more productive? Would we care less about measuring our productivity? My inclination is to think we would be much, much better off.

Self-governance has come under such relentless attack over the last few years because it encourages us to think for ourselves. And this attack didn’t begin recently nor did it emerge ex nihilo. John D. Rockefeller (who, ironically, co-founded the General Education Board in 1902) wrote, “I don’t want a nation of thinkers. I want a nation of workers.” His wish has largely come true.

The battle we are in is a battle over whether we will be slaves or masters, ruled or self-mastered. It is a battle over whether we will be unique or forced into a mold.

Thinking of students as identical to one another makes them substitutable, controllable and, ultimately, erasable. Moving forward, how do we avoid seeing ourselves as bottles to be filled by others? How do we embrace Plutarch’s exhortation to “create […] an impulse to think independently and an ardent desire for the truth?”

When it comes to education, isn’t that the question we must confront as we move through the strangest of times?

The Unmasking of Vaccine Science

From the Brownstone Institute

I recently purchased Aaron Siri’s new book Vaccines, Amen. As I flipped through the pages, I noticed a section devoted to his now-famous deposition of Dr Stanley Plotkin, the “godfather” of vaccines.

I’d seen viral clips circulating on social media, but I had never taken the time to read the full transcript — until now.

Siri’s interrogation was methodical and unflinching…a masterclass in extracting uncomfortable truths.

A Legal Showdown

In January 2018, Dr Stanley Plotkin, a towering figure in immunology and co-developer of the rubella vaccine, was deposed under oath in Pennsylvania by attorney Aaron Siri.

The case stemmed from a custody dispute in Michigan, where divorced parents disagreed over whether their daughter should be vaccinated. Plotkin had agreed to testify in support of vaccination on behalf of the father.

What followed over the next nine hours, captured in a 400-page transcript, was extraordinary.

Plotkin’s testimony revealed ethical blind spots, scientific hubris, and a troubling indifference to vaccine safety data.

He mocked religious objectors, defended experiments on mentally disabled children, and dismissed glaring weaknesses in vaccine surveillance systems.

A System Built on Conflicts

From the outset, Plotkin admitted to a web of industry entanglements.

He confirmed receiving payments from Merck, Sanofi, GSK, Pfizer, and several biotech firms. These were not occasional consultancies but long-standing financial relationships with the very manufacturers of the vaccines he promoted.

Plotkin appeared taken aback when Siri questioned his financial windfall from royalties on products like RotaTeq, and expressed surprise at the “tone” of the deposition.

Siri pressed on: “You didn’t anticipate that your financial dealings with those companies would be relevant?”

Plotkin replied: “I guess, no, I did not perceive that that was relevant to my opinion as to whether a child should receive vaccines.”

The man entrusted with shaping national vaccine policy had a direct financial stake in its expansion, yet he brushed it aside as irrelevant.

Contempt for Religious Dissent

Siri questioned Plotkin on his past statements, including one in which he described vaccine critics as “religious zealots who believe that the will of God includes death and disease.”

Siri asked whether he stood by that statement. Plotkin replied emphatically, “I absolutely do.”

Plotkin was not interested in ethical pluralism or accommodating divergent moral frameworks. For him, public health was a war, and religious objectors were the enemy.

He also admitted to using human foetal cells in vaccine production — specifically WI-38, a cell line derived from an aborted foetus at three months’ gestation.

Siri asked if Plotkin had authored papers involving dozens of abortions for tissue collection. Plotkin shrugged: “I don’t remember the exact number…but quite a few.”

Plotkin regarded this as a scientific necessity, though for many people — including Catholics and Orthodox Jews — it remains a profound moral concern.

Rather than acknowledging such sensitivities, Plotkin dismissed them outright, rejecting the idea that faith-based values should influence public health policy.

That kind of absolutism, where scientific aims override moral boundaries, has since drawn criticism from ethicists and public health leaders alike.

As NIH director Jay Bhattacharya later observed during his 2025 Senate confirmation hearing, such absolutism erodes trust.

“In public health, we need to make sure the products of science are ethically acceptable to everybody,” he said. “Having alternatives that are not ethically conflicted with foetal cell lines is not just an ethical issue — it’s a public health issue.”

Safety Assumed, Not Proven

When the discussion turned to safety, Siri asked, “Are you aware of any study that compares vaccinated children to completely unvaccinated children?”

Plotkin replied that he was “not aware of well-controlled studies.”

Asked why no placebo-controlled trials had been conducted on routine childhood vaccines such as hepatitis B, Plotkin said such trials would be “ethically difficult.”

That rationale, Siri noted, creates a scientific blind spot. If trials are deemed too unethical to conduct, then gold-standard safety data — the kind required for other pharmaceuticals — simply do not exist for the full childhood vaccine schedule.

Siri pointed to one example: Merck’s hepatitis B vaccine, administered to newborns. The company had only monitored participants for adverse events for five days after injection.

Plotkin didn’t dispute it. “Five days is certainly short for follow-up,” he admitted, but claimed that “most serious events” would occur within that time frame.

Siri challenged the idea that such a narrow window could capture meaningful safety data — especially when autoimmune or neurodevelopmental effects could take weeks or months to emerge.

Siri pushed on. He asked Plotkin if the DTaP and Tdap vaccines — for diphtheria, tetanus and pertussis — could cause autism.

“I feel confident they do not,” Plotkin replied.

But when shown the Institute of Medicine’s 2011 report, which found the evidence “inadequate to accept or reject” a causal link between DTaP and autism, Plotkin countered, “Yes, but the point is that there were no studies showing that it does cause autism.”

In that moment, Plotkin embraced a fallacy: treating the absence of evidence as evidence of absence.

“You’re making assumptions, Dr Plotkin,” Siri challenged. “It would be a bit premature to make the unequivocal, sweeping statement that vaccines do not cause autism, correct?”

Plotkin relented. “As a scientist, I would say that I do not have evidence one way or the other.”

The MMR

The deposition also exposed the fragile foundations of the measles, mumps, and rubella (MMR) vaccine.

When Siri asked for evidence of randomised, placebo-controlled trials conducted before MMR’s licensing, Plotkin pushed back: “To say that it hasn’t been tested is absolute nonsense,” he said, claiming it had been studied “extensively.”

Pressed to cite a specific trial, Plotkin couldn’t name one. Instead, he gestured to his own 1,800-page textbook: “You can find them in this book, if you wish.”

Siri replied that he wanted an actual peer-reviewed study, not a reference to Plotkin’s own book. “So you’re not willing to provide them?” he asked. “You want us to just take your word for it?”

Plotkin became visibly frustrated.

Eventually, he conceded there wasn’t a single randomised, placebo-controlled trial. “I don’t remember there being a control group for the studies I’m recalling,” he said.

The exchange foreshadowed a broader shift in public discourse, highlighting long-standing concerns that some combination vaccines were effectively grandfathered into the schedule without adequate safety testing.

In September 2025, President Trump called for the MMR vaccine to be broken up into three separate injections.

The proposal echoed a view that Andrew Wakefield had voiced decades earlier — namely, that combining all three viruses into a single shot might pose greater risk than spacing them out.

Wakefield was vilified and struck from the medical register. But now, that same question — once branded as dangerous misinformation — is set to be re-examined by the CDC’s new vaccine advisory committee, chaired by Martin Kulldorff.

The Aluminium Adjuvant Blind Spot

Siri next turned to aluminium adjuvants — the immune-activating agents used in many childhood vaccines.

When asked whether studies had compared animals injected with aluminium to those given saline, Plotkin conceded that research on their safety was limited.

Siri pressed further, asking if aluminium injected into the body could travel to the brain. Plotkin replied, “I have not seen such studies, no, or not read such studies.”

When presented with a series of papers showing that aluminium can migrate to the brain, Plotkin admitted he had not studied the issue himself, acknowledging that there were experiments “suggesting that that is possible.”

Asked whether aluminium might disrupt neurological development in children, Plotkin stated, “I’m not aware that there is evidence that aluminium disrupts the developmental processes in susceptible children.”

Taken together, these exchanges revealed a striking gap in the evidence base.

Compounds such as aluminium hydroxide and aluminium phosphate have been injected into babies for decades, yet no rigorous studies have ever evaluated their neurotoxicity against an inert placebo.

This issue returned to the spotlight in September 2025, when President Trump pledged to remove aluminium from vaccines, and world-leading researcher Dr Christopher Exley renewed calls for its complete reassessment.

A Broken Safety Net

Siri then turned to the reliability of the Vaccine Adverse Event Reporting System (VAERS) — the primary mechanism for collecting reports of vaccine-related injuries in the United States.

Did Plotkin believe most adverse events were captured in this database?

“I think…probably most are reported,” he replied.

But Siri showed him a government-commissioned study by Harvard Pilgrim, which found that fewer than 1% of vaccine adverse events are reported to VAERS.

“Yes,” Plotkin said, backtracking. “I don’t really put much faith into the VAERS system…”

Yet this is the same database officials routinely cite to claim that “vaccines are safe.”

Ironically, Plotkin himself recently co-authored a provocative editorial in the New England Journal of Medicine, conceding that vaccine safety monitoring remains grossly “inadequate.”

Experimenting on the Vulnerable

Perhaps the most chilling part of the deposition concerned Plotkin’s history of human experimentation.

“Have you ever used orphans to study an experimental vaccine?” Siri asked.

“Yes,” Plotkin replied.

“Have you ever used the mentally handicapped to study an experimental vaccine?” Siri asked.

“I don’t recollect…I wouldn’t deny that I may have done so,” Plotkin replied.

Siri cited a study conducted by Plotkin in which he had administered experimental rubella vaccines to institutionalised children who were “mentally retarded.”

Plotkin stated flippantly, “Okay well, in that case…that’s what I did.”

There was no apology, no sign of ethical reflection — just matter-of-fact acceptance.

Siri wasn’t done.

He asked if Plotkin had argued that it was better to test on those “who are human in form but not in social potential” rather than on healthy children.

Plotkin admitted to writing it.

Siri established that Plotkin had also conducted vaccine research on the babies of imprisoned mothers, and on colonised African populations.

Plotkin appeared to suggest that the scientific value of such studies outweighed the ethical lapses—an attitude that many would interpret as the classic ‘ends justify the means’ rationale.

But that logic fails the most basic test of informed consent. Siri asked whether consent had been obtained in these cases.

“I don’t remember…but I assume it was,” Plotkin said.

Assume?

This was post-Nuremberg research. And the leading vaccine developer in America couldn’t say for sure whether he had properly informed the people he experimented on.

In any other field of medicine, such lapses would be disqualifying.

A Casual Dismissal of Parental Rights

Plotkin’s indifference was not confined to his experiments on disabled children.

Siri asked whether someone who declined a vaccine due to concerns about missing safety data should be labelled “anti-vax.”

Plotkin replied, “If they refused to be vaccinated themselves or refused to have their children vaccinated, I would call them an anti-vaccination person, yes.”

Plotkin was less concerned about adults making that choice for themselves, but he had no tolerance for parents making those choices for their own children.

“The situation for children is quite different,” said Plotkin, “because one is making a decision for somebody else and also making a decision that has important implications for public health.”

In Plotkin’s view, the state held greater authority than parents over a child’s medical decisions — even when the science was uncertain.

The Enabling of Figures Like Plotkin

The Plotkin deposition stands as a case study in how conflicts of interest, ideology, and deference to authority have corroded the scientific foundations of public health.

Plotkin is no fringe figure. He is celebrated, honoured, and revered. Yet he promotes vaccines that have never undergone true placebo-controlled testing, shrugs off the failures of post-market surveillance, and admits to experimenting on vulnerable populations.

This is not conjecture or conspiracy — it is sworn testimony from the man who helped build the modern vaccine program.

Now, as Health Secretary Robert F. Kennedy, Jr. reopens long-dismissed questions about aluminium adjuvants and the absence of long-term safety studies, Plotkin’s once-untouchable legacy is beginning to fray.

Republished from the author’s Substack

Brownstone Institute

Bizarre Decisions about Nicotine Pouches Lead to the Wrong Products on Shelves

From the Brownstone Institute

A walk through a dozen convenience stores in Montgomery County, Pennsylvania, says a lot about how US nicotine policy actually works. Only about one in eight nicotine-pouch products for sale is legal. The rest are unauthorized—but they’re not all the same. Some are brightly branded, with uncertain ingredients, not approved by any Western regulator, and clearly aimed at impulse buyers. Others—like Sweden’s NOAT—are the opposite: muted, well-made, adult-oriented, and already approved for sale in Europe.

Yet in the United States, NOAT has been told to stop selling. In September 2025, the Food and Drug Administration (FDA) issued the company a warning letter for offering nicotine pouches without marketing authorization. That might make sense if the products were dangerous, but they appear to be among the safest on the market: mild flavors, low nicotine levels, and recyclable paper packaging. In Europe, regulators consider them acceptable. In America, they’re banned. The decision looks, at best, strange—and possibly arbitrary.

What the Market Shows

My October 2025 audit was straightforward. I visited twelve stores and recorded every distinct pouch product visible for sale at the counter. If the item matched one of the twenty ZYN products that the FDA authorized in January 2025, it was counted as legal. Everything else was counted as illegal.

Two of the stores told me they had recently received FDA letters and had already removed most illegal stock. The other ten stores were still dominated by unauthorized products—more than 93 percent of what was on display. Across all twelve locations, about 12 percent of products were legal ZYN, and about 88 percent were not.
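For readers who want to repeat this kind of count, the tally can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the `StoreCount` record, the function name, and the sample numbers are all hypothetical and are not the survey's actual data.

```python
from dataclasses import dataclass


@dataclass
class StoreCount:
    store: str
    legal: int    # distinct products matching the authorized list
    illegal: int  # every other distinct product on display


def pooled_legal_share(counts):
    """Share of all recorded products that were legal, pooled across stores."""
    legal = sum(c.legal for c in counts)
    total = sum(c.legal + c.illegal for c in counts)
    return legal / total if total else 0.0


# Placeholder numbers for illustration only; NOT the survey's data.
example = [
    StoreCount("store_a", legal=2, illegal=6),
    StoreCount("store_b", legal=1, illegal=11),
]
print(f"{pooled_legal_share(example):.0%}")
```

Pooling every product across stores, as this sketch does, can give a different figure than averaging each store's own percentage, so it is worth stating which method a reported share uses. A subset figure, such as the share for only the stores that had not received letters, would come from calling the same function on that subset.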

The illegal share wasn’t uniform. Many of the unauthorized products were clearly high-nicotine imports with flashy names like Loop, Velo, and Zimo. These products may be fine, but some are probably high in contaminants, and a few have very high nicotine levels. Others were subdued, plainly meant for adult users. NOAT was a good example of that second group: simple packaging, oat-based filler, restrained flavoring, and branding that makes no effort to look “cool.” It’s the kind of product any regulator serious about harm reduction would welcome.

Enforcement Works

To the FDA’s credit, enforcement does make a difference. The two stores that received official letters quickly pulled their illegal stock. That mirrors the agency’s broader efforts this year: new import alerts to detain unauthorized tobacco products at the border (see also Import Alert 98-06), and hundreds of warning letters to retailers, importers, and distributors.

But effective enforcement can’t solve a supply problem. The list of legal nicotine-pouch products is still extremely short—only a narrow range of ZYN items. Adults who want more variety, or stores that want to meet that demand, inevitably turn to gray-market suppliers. The more limited the legal catalog, the more the illegal market thrives.

Why the NOAT Decision Appears Bizarre

The FDA’s own actions make the situation hard to explain. In January 2025, it authorized twenty ZYN products after finding that they contained far fewer harmful chemicals than cigarettes and could help adult smokers switch. That was progress. But nine months later, the FDA has authorized nothing else, while sending a warning letter to NOAT, arguably the least youth-oriented pouch line in the world.

The outcome is bad for legal sellers and public health. ZYN is legal; a handful of clearly risky, high-nicotine imports continue to circulate; and a mild, adult-market brand that meets European safety and labeling rules is banned. Officially, NOAT’s problem is procedural—it lacks a marketing order. But in practical terms, the FDA is punishing the very design choices it claims to value: simplicity, low appeal to minors, and clean ingredients.

This approach also ignores the differences in actual risk. Studies consistently show that nicotine pouches have far fewer toxins than cigarettes and far less variability than many vapes. The biggest pouch concerns are uneven nicotine levels and occasional traces of tobacco-specific nitrosamines, depending on manufacturing quality. The serious contamination issues—heavy metals and inconsistent dosage—belong mostly to disposable vapes, particularly the flood of unregulated imports from China. Treating all “unauthorized” products as equally bad blurs those distinctions and undermines proportional enforcement.

A Better Balance: Enforce Upstream, Widen the Legal Path

My small Montgomery County survey suggests a simple formula for improvement.

First, keep enforcement targeted and focused on suppliers, not just clerks. Warning letters clearly change behavior at the store level, but the biggest impact will come from auditing distributors and importers, and stopping bad shipments before they reach retail shelves.

Second, make compliance easy. A single-page list of authorized nicotine-pouch products—currently the twenty approved ZYN items—should be posted in every store and attached to distributor invoices. Point-of-sale systems can block barcodes for anything not on the list, and retailers could affirm, once a year, that they stock only approved items.
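The barcode block could be as simple as an allowlist lookup at the register. The sketch below is a rough illustration under stated assumptions, not a description of any real point-of-sale system: the function name is hypothetical and the barcodes are placeholders, not real product codes.

```python
# Placeholder barcodes for illustration; a real list would come from the
# authorized-product sheet described above.
AUTHORIZED_BARCODES = frozenset({"000000000017", "000000000024"})


def may_sell(barcode: str, authorized=AUTHORIZED_BARCODES) -> bool:
    """Return True only if the scanned barcode is on the authorized list."""
    return barcode.strip() in authorized
```

An allowlist fails closed: anything not explicitly listed is refused, so a new unauthorized product is blocked without anyone having to add it to a ban list first.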

Third, widen the legal lane. The FDA launched a pilot program in September 2025 to speed review of new pouch applications. That program should spell out exactly what evidence is needed—chemical data, toxicology, nicotine release rates, and behavioral studies—and make timely decisions. If products like NOAT meet those standards, they should be authorized quickly. Legal competition among adult-oriented brands will crowd out the sketchy imports far faster than enforcement alone.

The Bottom Line

Enforcement matters, and the data show it works—where it happens. But the legal market is too narrow to protect consumers or encourage innovation. The current regime leaves a few ZYN products as lonely legal islands in a sea of gray-market pouches that range from sensible to reckless.

The FDA’s treatment of NOAT stands out as a case study in inconsistency: a quiet, adult-focused brand approved in Europe yet effectively banned in the US, while flashier and riskier options continue to slip through. That’s not a public-health victory; it’s a missed opportunity.

If the goal is to help adult smokers move to lower-risk products while keeping youth use low, the path forward is clear: enforce smartly, make compliance easy, and give good products a fair shot. Right now, we’re doing the first part well—but failing at the second and third. It’s time to fix that.

-

National2 days ago

National2 days agoCanada’s free speech record is cracking under pressure

-

Energy1 day ago

Energy1 day agoTanker ban politics leading to a reckoning for B.C.

-

Energy1 day ago

Energy1 day agoMeet REEF — the massive new export engine Canadians have never heard of

-

Business2 days ago

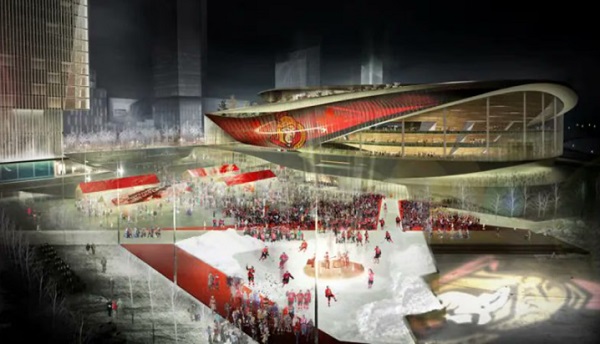

Business2 days agoTaxpayers Federation calls on politicians to reject funding for new Ottawa Senators arena

-

Fraser Institute1 day ago

Fraser Institute1 day agoClaims about ‘unmarked graves’ don’t withstand scrutiny

-

Censorship Industrial Complex2 days ago

Censorship Industrial Complex2 days agoOttawa’s New Hate Law Goes Too Far

-

Business2 days ago

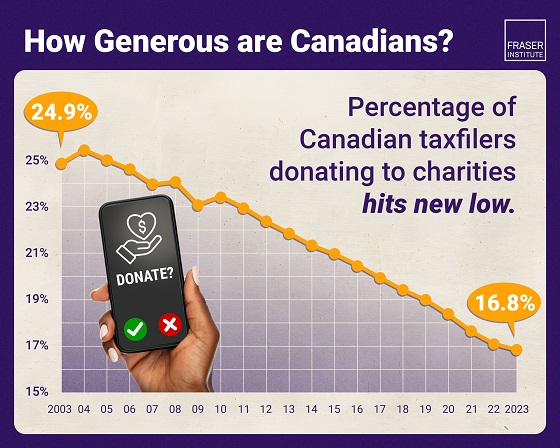

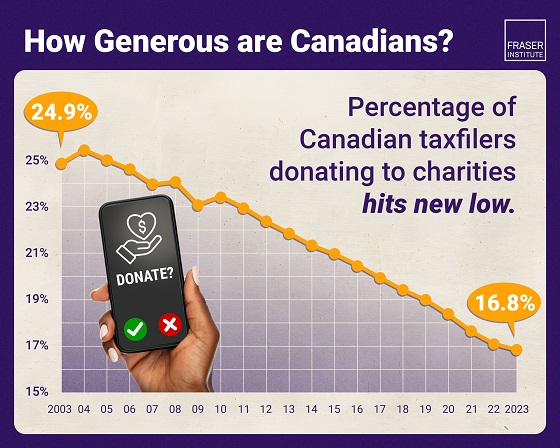

Business2 days agoAlbertans give most on average but Canadian generosity hits lowest point in 20 years

-

Business1 day ago

Business1 day agoToo nice to fight, Canada’s vulnerability in the age of authoritarian coercion