Digital ID

The End of Online Anonymity? Australia’s New Law Pushes Digital ID for Everyone To Ban Kids From Social Media

Australia is gearing up to roll out some of the world’s strictest social media rules, with Parliament having pushed through legislation to bar anyone under 16 from creating accounts on platforms like Facebook, Instagram, Snapchat, and TikTok. It’s a sweeping measure but, as the ink dries, the questions are piling up.

Prime Minister Anthony Albanese’s Labor government and the opposition teamed up on Thursday to pass the new restrictions with bipartisan enthusiasm. And why not? Opinion polls show a whopping 77% of Australians are behind the idea. Protecting kids online is an easy sell, which is why it’s often used to usher in the most draconian of laws. Still, the devil, as always, is in the details.

Proof of Age, But at What Cost?

Here’s the crux of the new law: to use social media, Australians will need to prove they’re old enough. That means showing ID, effectively ending the anonymity that’s long been a feature (or flaw, depending on your perspective) of the online experience. In theory, this makes sense—keeping kids out of online spaces designed for adults is hardly controversial. But in practice, it’s like using a sledgehammer to crack a walnut.

For one, there’s no clear blueprint for how this will work. Will social media platforms require passports and birth certificates at sign-up? Who’s going to handle and secure this flood of personal information? The government hasn’t offered much clarity and, until it does, the logistics look shaky.

And then there’s the matter of enforcement. Teenagers are famously tech-savvy, and history has shown that banning them from a platform is more of a speed bump than a roadblock. With VPNs, fake IDs, and alternate accounts already standard fare for navigating internet restrictions, how effective can this law really be?

The Hasty Debate

Critics on both sides of Parliament flagged concerns about the speed with which this legislation moved forward. But the Albanese government pressed ahead, arguing that urgent action was needed to protect young people. Their opponents in the Liberal-National coalition, not wanting to appear soft on tech regulation, fell in line. The result? A law that feels more like a political statement than a well-thought-out policy.

There’s no denying the appeal of bold action on Big Tech. Headlines about online predators and harmful content make it easy to rally public support. But there’s a fine line between decisive governance and reactionary policymaking.

Big Questions, Few Answers

The most glaring issue is privacy. Forcing users to hand over ID to access social media opens up a Pandora’s box of security concerns. Centralizing sensitive personal data creates a tempting target for hackers, and Australia’s track record with large-scale data breaches isn’t exactly reassuring.

There’s also the question of what happens when kids inevitably find workarounds. Locking them out of mainstream platforms doesn’t mean they’ll stop using the internet; it just pushes them into less regulated, potentially more harmful digital spaces. Is that really a win for online safety?

A Global Watch Party

Australia’s bold move is already drawing attention from abroad. Governments worldwide are grappling with how to regulate social media, and this legislation could set a precedent. But whether it becomes a model for others or a cautionary tale remains to be seen.

For now, the Albanese government has delivered a strong message: protecting children online is a priority. But the lack of clear answers about enforcement and privacy leaves the impression that this is a solution in search of a strategy.

All on the Platforms

Under the new social media law, the responsibility for enforcement doesn’t rest with the government, but with the very companies it targets. Platforms like Facebook, TikTok, and Instagram will be tasked with ensuring no Australian under 16 manages to slip through the digital gates. If they fail? They’ll face fines of up to A$50 million (about $32.4 million USD).

That’s a steep price for failing to solve a problem the government itself hasn’t figured out how to address. The legislation offers little in the way of specifics, leaving tech giants to essentially guess how they’re supposed to pull off this feat. The law vaguely mentions taking “reasonable steps” to verify age but skips the critical part: defining what “reasonable” means.

The Industry Pushback

Tech companies, predictably, are not thrilled. Meta, in its submission to a Senate inquiry, called the law “rushed” and out of touch with the current limitations of age-verification technology. “The social media ban overlooks the practical reality of age assurance technology,” Meta argued. Translation? The tools to make this work either don’t exist or aren’t reliable enough to enforce at scale.

X didn’t hold back either. The platform warned of potential misuse of the sweeping powers the legislation grants to the minister for communications. X CEO Linda Yaccarino’s team even raised concerns that these powers could be used to curb free speech, another way of saying that regulating who gets to log on could quickly evolve into regulating what they’re allowed to say.

And it’s not just the tech companies pushing back. The Human Rights Law Centre questioned the lawfulness of the bill, highlighting how it opens the door to intrusive data collection while offering no safeguards against abuse.

Promises, Assurances, and Ambiguities

The government insists it won’t force people to hand over passports, licenses, or tap into the contentious new digital ID system to prove their age. But here’s the catch: there’s nothing in the current law explicitly preventing that, either. The government is effectively asking Australians to trust that these measures won’t lead to broader surveillance, even as the legislation creates the infrastructure to make it possible.

This uncertainty was laid bare during the bill’s rushed four-hour review. Liberal National Senator Matt Canavan pressed for clarity, and while the Coalition managed to extract a promise for amendments preventing platforms from demanding IDs outright, it still feels like a band-aid on an otherwise sprawling mess.

A Law in Search of a Strategy

Part of the problem is that the government itself doesn’t seem entirely sure how this law will work. A trial of age-assurance technology is planned for mid-2025, long after the law is expected to take effect. The communications minister, Michelle Rowland, will ultimately decide what enforcement methods apply to which platforms, wielding what critics describe as “expansive” and potentially unchecked authority.

It’s a power dynamic that brings to mind a comment from Rowland’s predecessor, Stephen Conroy, who once bragged about his ability to make telecommunications companies “wear red underpants on [their] head” if he so desired. Tech companies now face the unenviable task of interpreting a vague law while bracing for whatever decisions the minister might make in the future.

The list of platforms affected by the law is another moving target. Government officials have dropped hints in interviews (YouTube, for example, might not make the cut), but these decisions will ultimately be left to the minister. This pick-and-choose approach adds another layer of uncertainty, leaving tech companies and users alike guessing at what’s coming next.

The Bigger Picture

The debate around this legislation is as much about philosophy as it is about enforcement. On one hand, the government is trying to address legitimate concerns about children’s safety online. On the other, it’s doing so in a way that raises serious questions about privacy, free speech, and the limits of state power over the digital realm.

Australia’s experiment could become a model for other countries grappling with the same challenges, or a cautionary tale of what happens when governments legislate without a clear plan. For now, the only certainty is uncertainty. In a year’s time, Australians might find themselves proving their age every time they try to log in, or watching the system collapse under the weight of its own contradictions.

Censorship Industrial Complex

China announces “improvements” to social credit system

MxM News

Quick Hit:

Beijing released new guidelines Monday to revamp its social credit system, promising stronger information controls while deepening the system’s reach across China’s economy and society. Critics say the move reinforces the Communist Party’s grip under the banner of “market efficiency.”

Key Details:

- The guideline was issued by top Chinese government and Communist Party offices, listing 23 measures to expand and standardize the social credit system.

- It aims to integrate the credit system across all sectors of China’s economy to support what Beijing calls “high-quality development.”

- Officials claim the new framework will respect information security and individual rights—despite growing global concerns over surveillance and state overreach.

Diving Deeper:

China is doubling down on its social credit system with a newly issued guideline meant to “improve” and expand the controversial surveillance-driven program. Released by both the Communist Party’s Central Committee and the State Council, the document outlines 23 specific measures aimed at building a unified national credit system that will touch nearly every corner of Chinese society.

Framed as a tool for “high-quality development,” the guideline declares that credit assessments will increasingly shape the rules of engagement for businesses, government agencies, and individual citizens. The system, according to the National Development and Reform Commission (NDRC), has already played a role in shaping China’s financial services, government efficiency, and business environment.

Critics of the social credit system have long warned that it serves as an instrument of authoritarian control—monitoring citizens’ behavior, punishing dissent, and rewarding obedience to the Communist Party. By integrating credit data across all sectors and enforcing a “shared benefits” model, the new guideline appears to entrench, not ease, the Party’s involvement in everyday life.

Still, Beijing is attempting to temper foreign and domestic concerns over privacy. The NDRC emphasized that the system is being built on the “fundamental principle” of protecting personal data. Officials pledged to avoid excessive data collection and crack down on any unlawful use of information.


-

2025 Federal Election2 days ago

2025 Federal Election2 days agoRCMP Whistleblowers Accuse Members of Mark Carney’s Inner Circle of Security Breaches and Surveillance

-

Daily Caller21 hours ago

Daily Caller21 hours agoTrump Executive Orders ensure ‘Beautiful Clean’ Affordable Coal will continue to bolster US energy grid

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoBureau Exclusive: Chinese Election Interference Network Tied to Senate Breach Investigation

-

Business23 hours ago

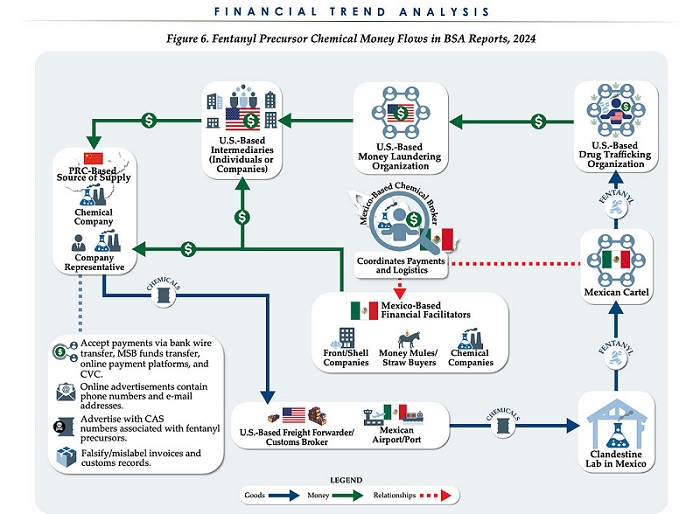

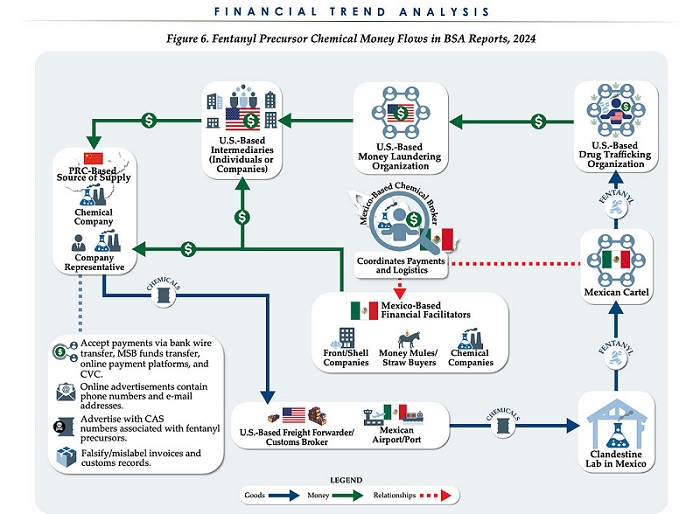

Business23 hours agoChina, Mexico, Canada Flagged in $1.4 Billion Fentanyl Trade by U.S. Financial Watchdog

-

2025 Federal Election21 hours ago

2025 Federal Election21 hours agoBREAKING from THE BUREAU: Pro-Beijing Group That Pushed Erin O’Toole’s Exit Warns Chinese Canadians to “Vote Carefully”

-

2025 Federal Election1 day ago

2025 Federal Election1 day agoTucker Carlson Interviews Maxime Bernier: Trump’s Tariffs, Mass Immigration, and the Oncoming Canadian Revolution

-

2025 Federal Election20 hours ago

2025 Federal Election20 hours agoAllegations of ethical misconduct by the Prime Minister and Government of Canada during the current federal election campaign

-

COVID-1917 hours ago

COVID-1917 hours agoTamara Lich and Chris Barber trial update: The Longest Mischief Trial of All Time continues..