Brownstone Institute

Why Is Our Education System Failing to Educate?

From the Brownstone Institute

I suspect many of you know my story. But, for those who don’t, the short version is that I taught philosophy — ethics and ancient philosophy, in particular — at Western University in Canada until September 2021 when I was very publicly terminated “with cause” for refusing to comply with Western’s COVID-19 policy.

What I did — question, critically evaluate and, ultimately, challenge what we now call “the narrative” — is risky behaviour. It got me fired, labeled an “academic pariah,” chastised by mainstream media, and vilified by my peers. But this ostracization and vilification, it turns out, was just a symptom of a shift towards a culture of silence, nihilism, and mental atrophy that had been brewing for a long time.

You know that parental rhetorical question, “So if everyone jumped off a cliff, would you do it too?” It turns out that about 90 percent would jump, and that most of that 90 percent wouldn’t ask any questions about the height of the cliff, alternative options, accommodations for the injured, etc. What was supposed to be a cautionary rhetorical joke has become the modus operandi of the Western world.

Admittedly, I am a bit of an odd choice as the keynote speaker for an education conference. I have no specialized training in the philosophy of education or in pedagogy. In graduate school, you receive little formal instruction about how to teach. You learn by experience, research, trial by fire, and by error. And, of course, I was terminated from my position as a university teacher. But I do think a lot about education. I look at how many people are willing to outsource their thinking and I wonder, what went wrong? Confronted with the products of our public school system every day for 20 years, I wonder what went wrong? And, finally, as the mother of a 2-year-old, I think a lot about what happens in the early years to encourage a better outcome than we are seeing today.

My aim today is to talk a bit about what I saw in university students during my teaching career, why I think the education system failed them, and the only two basic skills any student at any age really needs.

Let’s start by doing something I used to do regularly in class, something some students loved and others hated. Let’s brainstorm some answers to this question: What does it mean to “be educated?”

[Answers from the audience included: “to acquire knowledge,” “to learn the truth,” “to develop a set of required skills,” “to get a degree.”]

Many answers were admirable but I noticed that most describe education passively: “to be educated,” “to get a degree,” “to be informed” are all passive verbs.

When it comes to writing, we are often told to use the active voice. It is clearer, more emphatic, and creates greater emotional impact. And yet the predominant way we describe education is passive. But is education really a passive experience? Is it something that just happens to us like getting rained on or being scratched by a cat? And do you need to be acted on by someone else in order to become educated? Or is education a more active, personal, emphatic and impactful experience? Might “I am educating,” “I am learning” be more accurate descriptions?

My experience in the classroom was certainly consistent with thinking of education as a passive experience. Over the years, I saw an increasing trend towards timidity, conformity and apathy, all signs of educational passivity. But this was a stark departure from the university culture that met me as an undergraduate in the mid-90s.

As an undergraduate, my classes were robust theaters of The Paper Chase-style effervescent debate. But there was a palpable shift sometime in the late 90s. A hush fell over the classroom. Topics once relied on to ignite discussion — abortion, slavery, capital punishment — no longer held the same appeal. Fewer and fewer hands went up. Students trembled at the thought of being called on and, when they did speak, they parroted a set of ‘safe’ ideas and frequently used “of course” to refer to ideas that would allow them to safely navigate the Scylla and Charybdis of topics considered to be off-limits by the woke zealots.

The stakes are even higher now. Students who question or refuse to comply are rejected or de-enrolled. Recently, an Ontario university student was suspended for asking for a definition of “colonialism.” Merely asking for clarification in the 21st century is academic heresy. Professors like myself are punished or terminated for speaking out, and our universities are becoming increasingly closed systems in which autonomous thought is a threat to the neoliberal groupthink model of ‘education.’

I spent some time thinking in concrete terms about the traits I saw in the novel, 21st century student. With some exceptions, most students suffer from the following symptoms of our educational failure. They are (for the most part):

- “Information-focused,” not “wisdom-interested:” they are computational, able to input and output information (more or less), but lack the critical ability to understand why they are doing so or to manipulate the data in unique ways.

- Science and technology worshipping: they treat STEM (science, technology, engineering and mathematics) as a god, as an end in itself rather than an instrument to achieve some end.

- Intolerant of uncertainty, complications, gray areas, open questions, and they are generally unable to formulate questions themselves.

- Apathetic, unhappy, even miserable (and I’m not sure they ever felt otherwise so they may not recognize these states for what they are).

- Increasingly unable to engage in counterfactual thinking. (I will return to this idea in a moment.)

- Instrumentalist: everything they do is for the sake of something else.

To elaborate on this last point, when I used to ask my students why they were at university, the following sort of conversation would usually ensue:

Why did you come to university?

To get a degree.

Why?

So I can get into law school (nursing or some other impressive post-graduate program).

Why?

So I can get a good job.

Why?

The well of reflex answers typically started to dry up at that point. Some were honest that the lure of a “good job” was to attain money or a certain social status; others seemed genuinely perplexed by the question or would simply say: “My parents tell me I should,” “My friends are all doing it,” or “Society expects it.”

Being an instrumentalist about education means that you see it as valuable only as a way to obtain some further, non-educational good. Again, the passivity is palpable. In this view, education is something that gets poured into you. Once you get enough poured in, it’s time to graduate and unlock the door to the next life prize. But this makes education, for its own sake, meaningless and substitutable. Why not just buy the subject-specific microchip when it becomes available and avoid all the unpleasant studying, questioning, self-reflection, and skill-building?

Time has shown us where this instrumentalism has gotten us: we live in an era of pseudo-intellectuals, pseudo-students and pseudo-education, each of us becoming increasingly less clear why we need education (of the sort offered by our institutions), or how it’s helping to create a better world.

Why the change? How did intellectual curiosity and critical thinking get trained out of our universities? It’s complex but there are three factors that surely contributed:

- Universities became businesses. They became corporate entities with boards of governors, customers and ad campaigns. In early 2021, Huron College (where I worked) appointed its first board of governors with members from Rogers, Sobeys, and EllisDon, a move author Christopher Newfield calls the “great mistake.” Regulatory capture (of the sort that led the University of Toronto to partner with Moderna) is just one consequence of this collusion.

- Education became a commodity. Education is treated as a purchasable, exchangeable good, which fits well with the idea that education is something that can be downloaded to anyone’s empty mind. There is an implicit assumption of equality and mediocrity, here; you must believe that every student is roughly the same in skill, aptitude, interest, etc. to be able to be filled this way.

- We mistook information for wisdom. Our inheritance from the Enlightenment, the idea that reason will allow us to conquer all, has morphed into information ownership and control. We need to appear informed to seem educated, and we shun the uninformed or misinformed. We align with the most acceptable source of information and forgo any critical assessment of how that source attained its information. But this isn’t wisdom. Wisdom goes beyond information; it pivots on a sense of care, attention, and context, allowing us to sift through a barrage of information, selecting and acting only on the truly worthy.

This is a radical departure from the earliest universities, which began in the 4th century BC: Plato teaching in the grove of Academus, Epicurus in his private garden. When they met to discuss, there were no corporate partnerships, no boards of directors. They were drawn together by a shared love of questioning and problem-solving.

Out of these early universities was born the concept of liberal arts — grammar, logic, rhetoric, arithmetic, geometry, music and astronomy — studies which are “liberal” not because they are easy or unserious, but because they are suitable for those who are free (liberalis), as opposed to slaves or animals. In the era before SMEs (subject matter experts), these were the subjects thought to be essential preparation for becoming a good, well-informed citizen who is an effective participant in public life.

In this view, education is not something you receive and certainly not something you buy; it is a disposition, a way of life you create for yourself grounded in what Dewey called “skilled powers of thinking.” It helps you to become questioning, critical, curious, creative, humble and, ideally, wise.

The Lost Art of Counterfactual Thinking

I said earlier that I would return to the subject of counterfactual thinking, what it is, why it’s been lost and why it’s important. And I would like to start with another thought experiment: close your eyes and think about one thing that might have been different over the last 3 years that might have made things better.

What did you pick? No WHO pandemic declaration? A different PM or President? Effective media? More tolerant citizens?

Maybe you wondered, what if the world was more just? What if truth could really save us (quickly)?

This “what if” talk is, at its core, counterfactual thinking. We all do it. What if I had become an athlete, written more, scrolled less, married someone else?

Counterfactual thinking enables us to shift from perceiving the immediate environment to imagining a different one. It is key for learning from past experiences, planning and predicting (if I jump off the cliff, x is likely to happen), problem solving, innovation and creativity (maybe I’ll shift careers, arrange my kitchen drawers differently), and it is essential for improving an imperfect world. It also underpins moral emotions like regret and blame (I regret betraying my friend). Neurologically, counterfactual thinking depends on a network of systems for affective processing, mental simulation, and cognitive control, and deficits in it are a symptom of a number of mental illnesses, including schizophrenia.

I don’t think it would be an exaggeration to say that we have lost our ability for counterfactual thinking en masse. But why did this happen? There are a lot of factors — with political ones at the top of the list — but one thing that surely contributed is that we lost a sense of play.

Yes, play. Let me explain. With a few exceptions, our culture has a pretty cynical view of the value of play. Even when we do it, we see play time as wasted and messy, allowing for an intolerable number of mistakes and the possibility of outcomes that don’t fit neatly into an existing framework. This messiness is a sign of weakness, and weakness is a threat to our tribal culture.

I think our culture is intolerant of play because it is intolerant of individuality and of distractions from the messaging we’re “supposed” to hear. It is also intolerant of joy, of anything that helps us to feel healthier, more alive, more focused and more jubilant. Furthermore, it doesn’t result in immediate, “concrete deliverables.”

But what if there was more play in science, in medicine and in politics? What if politicians said “What if we did x instead? Let’s just try out the idea?” What if, instead of your doctor writing a script for the “recommended” pharmaceutical, s/he said “What if you reduced your sugar intake… or… tried walking more? Let’s just try.”

“The stick that stirs the drink”

The non-superficiality of play is hardly a new idea. It was central to the development of the culture of Ancient Greece, one of the greatest civilizations in the world. It is telling that Greek words for play (paidia), children (paides) and education (paideia) have the same root. For the Greeks, play was essential not just to sport and theatre, but to ritual, music, and of course word play (rhetoric).

The Greek philosopher Plato saw play as deeply influential on the way children develop into adults. We can prevent social disorder, he wrote, by regulating the nature of children’s play. In his Laws, Plato proposed harnessing play for certain purposes: “If a boy is to be a good farmer or a good builder, he should play at building toy houses or at farming and be provided by his tutor with miniature tools modelled on real ones…One should see games as a means of directing children’s tastes and inclinations to the role they will fill as adults.”

Play is also the basis of the Socratic method, the back-and-forth technique of questioning and answering, trying things out, generating contradictions and imagining alternatives to find better hypotheses. Dialectic is essentially playing with ideas.

A number of contemporary thinkers agree with Plato. The philosopher Colin McGinn wrote in 2008 that “Play is a vital part of any full life, and a person who never plays is worse than a ‘dull boy:’ he or she lacks imagination, humour and a proper sense of value. Only the bleakest and most life-denying Puritanism could warrant deleting all play from human life…”

And Stuart Brown, founder of the National Institute for Play, wrote: “I don’t think it is too much to say that play can save your life. It certainly has salvaged mine. Life without play is a grinding, mechanical existence organized around doing things necessary for survival. Play is the stick that stirs the drink. It is the basis of all art, games, books, sports, movies, fashion, fun, and wonder — in short, the basis of what we think of as civilization.”

Education as Activity

Play is key but it’s not the only thing missing in modern education. The fact that we have lost it is a symptom, I think, of a more fundamental misunderstanding about what education is and is meant to do.

Let’s go back to the idea of education being an activity. Perhaps the most well-known quotation about education is “Education is not the filling of a pail, but the lighting of a fire.” It litters university recruitment pages, inspirational posters, mugs, and sweatshirts. Typically attributed to William Butler Yeats, the quotation is actually from Plutarch’s essay “On Listening” in which he writes “For the mind does not require filling like a bottle, but rather, like wood, it only requires kindling to create in it an impulse to think independently and an ardent desire for the truth.”

The way Plutarch contrasts learning with filling suggests that the latter was a common, but mistaken, idea. Strangely, we seem to have returned to the mistake and to the assumption that, once you get your bottle filled up, you are complete, you are educated. But if education is a kindling instead of a filling, how is the kindling achieved? How do you help to “create an impulse to think independently?” Let’s do another thought experiment.

If you knew that you could get away with anything, acting with complete impunity, what would you do?

There is a story from Plato’s Republic, Book II (discussing the value of justice) that fleshes out this question. Plato describes a shepherd who stumbles upon a ring that grants him the ability to become invisible. He uses his invisibility to seduce the queen, kill her king, and take over the kingdom. Glaucon, one of the interlocutors in the dialogue, suggests that, if there were two such rings, one given to a just man, and the other to an unjust man, there would be no difference between them; they would both take advantage of the ring’s powers, suggesting that anonymity is the only barrier between a just and an unjust person.

Refuting Glaucon, Socrates says that the truly just person will do the right thing even with impunity because he understands the true benefits of acting justly.

Isn’t this the real goal of education, namely to create a well-balanced person who loves learning and justice for their own sakes? This person understands that the good life consists not in seeming but in being, in having a balanced inner self that takes pleasure in the right things because of an understanding of what they offer.

In the first book of his canonical ethical text, Aristotle (Plato’s student) asks what is the good life? What does it consist of? His answer is an obvious one: happiness. But his view of happiness is a bit different from ours. It is a matter of flourishing, which means functioning well according to your nature. And functioning well according to human nature is achieving excellence in reasoning, both intellectually and morally. The intellectual virtues (internal goods) include: scientific knowledge, technical knowledge, intuition, practical wisdom, and philosophical wisdom. The moral virtues include: justice, courage, and temperance.

For Aristotle, what our lives look like from the outside — wealth, health, status, social media likes, reputation — are all “external goods.” It’s not that these are unimportant but we need to understand their proper place in the good life. Having the internal and external goods in their right proportion is the only way to become an autonomous, self-governing, complete person.

It’s pretty clear that we aren’t flourishing as a people, especially if the following are any indication: Canada recently ranked 15th on the World Happiness Report, we have unprecedented levels of anxiety and mental illness, and in 2021 a children’s mental health crisis was declared and the NIH reported an unprecedented number of drug overdose deaths.

By contrast with most young people today, the person who is flourishing and complete will put less stock in the opinions of others, including institutions, because they will have more fully developed internal resources and they will be more likely to recognize when a group is making a bad decision. They will be less vulnerable to peer pressure and coercion, and they will have more to rely on if they do become ostracized from the group.

Educating with a view to the intellectual and moral virtues develops a lot of other things we are missing: research and inquiry skills, physical and mental agility, independent thinking, impulse control, resilience, patience and persistence, problem solving, self-regulation, endurance, self-confidence, self-satisfaction, joy, cooperation, collaboration, negotiation, empathy, and even the ability to put energy into a conversation.

What should be the goals of education? It’s pretty simple (in conception even if not in execution). At any age, for any subject matter, the only two goals of education are:

- To create a self-ruled (autonomous) person from the ‘inside out,’ who…

- Loves learning for its own sake

Education, in this view, is not passive and it is never complete. It is always in process, always open, always humble and humbling.

My students, unfortunately, were like the Republic’s shepherd; they measured the quality of their lives by what they could get away with and by what their lives looked like from the outside. But their lives were like a shiny apple that, when you cut into it, is rotten on the inside. And their interior emptiness left them aimless, hopeless, dissatisfied and miserable.

But it doesn’t have to be this way. Imagine what the world would be like if it were made up of self-ruled people. Would we be happier? Would we be healthier? Would we be more productive? Would we care less about measuring our productivity? My inclination is to think we would be much, much better off.

Self-governance has come under such relentless attack over the last few years because it encourages us to think for ourselves. And this attack didn’t begin recently nor did it emerge ex nihilo. John D. Rockefeller (who, ironically, co-founded the General Education Board in 1902) wrote, “I don’t want a nation of thinkers. I want a nation of workers.” His wish has largely come true.

The battle we are in is a battle over whether we will be slaves or masters, ruled or self-mastered. It is a battle over whether we will be unique or forced into a mold.

Thinking of students as identical to one another makes them substitutable, controllable and, ultimately, erasable. Moving forward, how do we avoid seeing ourselves as bottles to be filled by others? How do we embrace Plutarch’s exhortation to “create […] an impulse to think independently and an ardent desire for the truth?”

When it comes to education, isn’t that the question we must confront as we move through the strangest of times?

If the President in the White House can’t make changes, who’s in charge?

Who Controls the Administrative State?

President Trump on March 20, 2025, ordered the following: “The Secretary of Education shall, to the maximum extent appropriate and permitted by law, take all necessary steps to facilitate the closure of the Department of Education.”

That is interesting language: to “take all necessary steps to facilitate the closure” is not the same as closing it. And what is “permitted by law” is precisely what is in dispute.

It is meant to feel like abolition, and the media reported it as such, but it is not even close. This is not Trump’s fault. The supposed authoritarian has his hands tied in many directions, even over agencies he supposedly controls and for whose actions he must ultimately bear responsibility.

The Department of Education is an executive agency, created by Congress in 1979. Trump wants it gone forever. So do his voters. Can he do that? No. But can he destaff the place and scatter its functions? No one knows for sure. Who decides? Presumably the highest court, eventually.

How this is decided – whether the president is actually in charge or really just a symbolic figure like the King of Sweden – affects not just this one destructive agency but hundreds more. Indeed, the fate of freedom and the functioning of constitutional republics may depend on the answer.

All burning questions of politics today turn on who or what is in charge of the administrative state. No one knows the answer and this is for a reason. The main functioning of the modern state falls to a beast that does not exist in the Constitution.

The public mind has never had great love for bureaucracies. Consistent with Max Weber’s worry, they have put society in an impenetrable “iron cage” built of bloodless rationalism, needling edicts, corporatist corruption, and never-ending empire-building checked by neither budgetary restraint nor plebiscite.

Today’s full consciousness of the authority and ubiquity of the administrative state is rather new. The term itself is a mouthful and doesn’t come close to describing the breadth and depth of the problem, including its root systems and retail branches. The new awareness is that neither the people nor their elected representatives are really in charge of the regime under which we live, which betrays the whole political promise of the Enlightenment.

This dawning awareness is probably 100 years late. The machinery of what is popularly known as the “deep state” – I’ve argued there are deep, middle, and shallow layers – has been growing in the US since the inception of the civil service in 1883 and became thoroughly entrenched over two world wars and countless crises at home and abroad.

The edifice of compulsion and control is indescribably huge. No one can agree precisely on how many agencies there are or how many people work for them, much less how many institutions and individuals work on contract for them, either directly or indirectly. And that is just the public face; the subterranean branch is far more elusive.

The revolt against them all came with the Covid controls, when everyone was surrounded on all sides by forces outside our purview and about which the politicians knew not much at all. Then those same institutional forces appeared to be involved in overturning the rule of a very popular politician whom they tried to stop from gaining a second term.

The combination of this series of outrages – what Jefferson in his Declaration called “a long train of abuses and usurpations, pursuing invariably the same Object” – has led to a torrent of awareness. This has translated into political action.

A distinguishing mark of Trump’s second term has been an optically concerted effort, at least initially, to take control of and then curb administrative state power, more so than any executive in living memory. At every step in these efforts, there has been some barrier, and often many, on all sides.

There are at least 100 legal challenges making their way through courts. District judges are striking down Trump’s ability to fire workers, redirect funding, curb responsibilities, and otherwise change the way the agencies do business.

Even the signature early achievement of DOGE – the shuttering of USAID – has been halted by a judge who has attempted to reverse it. A judge has even dared tell the Trump administration who it can and cannot hire at USAID.

Not a day goes by when the New York Times does not manufacture some maudlin defense of the put-upon minions of the tax-funded managerial class. In this worldview, the agencies are always right, whereas any elected or appointed person seeking to rein them in or terminate them is attacking the public interest.

After all, as it turns out, legacy media and the administrative state have worked together for at least a century to cobble together what was conventionally called “the news.” Where would the NYT or the whole legacy media otherwise be?

So ferocious has been the pushback against even the paltry successes and often cosmetic reforms of MAGA/MAHA/DOGE that vigilantes have engaged in terrorism against Teslas and their owners. Not even returning astronauts from being “lost in space” has redeemed Elon Musk from the wrath of the ruling class. Hating him and his companies is the “new thing” for NPCs, on a long list that began with masks, shots, supporting Ukraine, and surgical rights for gender dysphoria.

What is really at stake, more so than any issue in American life (and this applies to states around the world) – far more than any ideological battles over left and right, red and blue, or race and class – is the status, power, and security of the administrative state itself and all its works.

We claim to support democracy yet all the while, empires of command-and-control have arisen among us. The victims have only one mechanism available to fight back: the vote. Can that work? We do not yet know. This question will likely be decided by the highest court.

All of which is awkward. It is impossible to get around this US government organizational chart. All but a handful of agencies live under the category of the executive branch. Article 2, Section 1, says: “The executive Power shall be vested in a President of the United States of America.”

Does the president control the whole of the executive branch in a meaningful way? One would think so. It’s impossible to understand how it could be otherwise. The chief executive is…the chief executive. He is held responsible for what these agencies do – we certainly blasted away at the Trump administration in the first term for everything that happened under his watch. In that case, and if the buck really does stop at the Oval Office desk, the president must have some modicum of control beyond the ability to tag a marionette to get the best parking spot at the agency.

What is the alternative to presidential oversight and management of the agencies listed in this branch of government? They run themselves? That claim means nothing in practice.

For an agency to be deemed “independent” turns out to mean codependency with the industries regulated, subsidized, penalized, or otherwise impacted by its operations. HUD does housing development, FDA does pharmaceuticals, DOA does farming, DOL does unions, DOE does oil and turbines, DOD does tanks and bombs, FAA does airlines, and so on. It goes on forever.

That’s what “independence” means in practice: total acquiescence to industrial cartels, trade groups, and behind-the-scenes systems of payola, blackmail, and graft, while the powerless among the people live with the results. This much we have learned and cannot unlearn.

That is precisely the problem that cries out for a solution. The solution of elections seems reasonable only if the people we elected actually have the authority over the thing they seek to reform.

There are criticisms of the idea of executive control of executive agencies, which is really nothing other than the system the Founders established.

First, conceding more power to the president raises fears that he will behave like a dictator, a fear that is legitimate. Partisan supporters of Trump won’t be happy when the precedent is cited to reverse Trump’s political priorities and the agencies turn on red-state voters in revenge.

That problem is solved by dismantling agency power itself, which, interestingly, is mostly what Trump’s executive orders have sought to achieve and which the courts and media have worked to stop.

Second, one worries about the return of the “spoils system,” the supposedly corrupt system by which the president hands out favors to friends in the form of emoluments, a practice the establishment of the civil service was supposed to stop.

In reality, the new system of the early 20th century fixed nothing but only added another layer, a permanent ruling class to participate more fully in a new type of spoils system that operated now under the cloak of science and efficiency.

Honestly, can we really compare the petty thievery of Tammany Hall to the global depredations of USAID?

Third, it is said that presidential control of agencies threatens to erode checks and balances. The obvious response is the organizational chart above. That happened long ago as Congress created and funded agency after agency from the Wilson to the Biden administration, all under executive control.

Congress perhaps wanted the administrative state to be an unannounced and unaccountable fourth branch, but nothing in the founding documents created or imagined such a thing.

If you are worried about being dominated and destroyed by a ravenous beast, the best approach is not to adopt one, feed it to adulthood, train it to attack and eat people, and then unleash it.

The Covid years taught us to fear the power of the agencies and those who control them not just nationally but globally. The question now is two-fold: what can be done about it and how to get from here to there?

Trump’s executive order on the Department of Education illustrates the point precisely. His administration is so uncertain of what it does and can control, even of agencies that are wholly executive agencies, listed clearly under the heading of executive agencies, that it has to dodge and weave around practical and legal barriers and land mines, even in its own supposed executive pronouncements, even to urge what might amount to minor reforms.

Whoever is in charge of such a system, it is clearly not the people.


The New Enthusiasm for Slaughter

From the Brownstone Institute

By

What War Means

My mother once told me how my father still woke up screaming in the night years after I was born, decades after the Second World War (WWII) ended. I had not known – probably like most children of those who fought. For him, it was visions of his friends going down in burning aircraft – other bombers of his squadron off north Australia – and of being helpless, watching as they burned and fell. Few born after that war could really appreciate what their fathers, and mothers, went through.

Early in the movie Saving Private Ryan, there is an extended D-Day scene of the front doors of the landing craft opening on the Normandy beaches, and all those inside being torn apart by bullets. It happens to one landing craft after another. Bankers, teachers, students, and farmers are ripped to pieces, their guts spilling out whilst they, still alive, call for help that cannot come. That is what happens when a machine gun opens up through the open door of a landing craft, or of an armored personnel carrier carrying a group sent to secure a tree line.

It is what a lot of politicians are calling for now.

People with shares in the arms industry become a little richer every time one of those shells is fired and has to be replaced. They gain financially, and often politically, from bodies being ripped open. This is what we call war. It is increasingly popular as a political strategy, though generally for others and the children of others.

Of course, the effects of war go beyond the dismembering and lonely death of many of those fighting. Massacres of civilians and rape of women can become common, as brutality enables humans to be seen as unwanted objects. If all this sounds abstract, apply it to your loved ones and think what that would mean.

I believe there can be just wars, and this is not a discussion about the evil of war, or who is right or wrong in current wars. Just a recognition that war is something worth avoiding, despite its apparent popularity amongst many leaders and our media.

The EU Reverses Its Focus

When the Brexit vote determined that Britain would leave the European Union (EU), I, like many, despaired. We should learn from history, and the EU’s existence had coincided with the longest period of peace between Western European States in well over 2,000 years.

Leaving the EU seemed to be risking this success. Surely, it is better to work together, to talk and cooperate with old enemies, in a constructive way? The media, and the political left, center, and much of the right seemed at that time, all of nine years ago, to agree. Or so the story went.

We now face a new reality as the EU leadership scrambles to justify continuing a war. Not only has it sought to continue the war, it has staunchly refused even to countenance discussion of ending the killing. It has taken a new regime from across the ocean, a subject of European mockery, to do that.

In Europe, and in parts of American politics, something is going on that is very different from the question of whether current wars are just or unjust. It is an apparent belief that advocacy for continued war is virtuous. Talking to leaders of an opposing country in a war that is killing Europeans by the tens of thousands has been seen as traitorous. Those proposing to view the issues from both sides are somehow “far right.”

The EU, once intended as an instrument to end war, now has a European rearmament strategy. The irony seems lost on both its leaders and its media. Arguments such as “peace through strength” are pathetic when accompanied by censorship, propaganda, and a refusal to talk.

As US Vice-President JD Vance recently asked European leaders, what values are they actually defending?

Europe’s Need for Outside Help

A lack of experience of war does not seem sufficient to explain the current enthusiasm for continuing wars. The architects of WWII in Europe had certainly experienced the carnage of the First World War. Apart from the financial incentives that human slaughter can bring, there are also political ideologies that enable the mass death of others to be turned into an abstract and even positive idea.

Those dying must be seen to be from a different class, of different intelligence, or otherwise justifiable fodder to feed the cause of the Rules-Based Order or whatever other slogan can distinguish an ‘us’ from a ‘them.’ While the current incarnation seems more of a class thing than a geographical or nationalistic one, European history is rife with variations of both.

Europe appears to be back where it used to be, the aristocracy burning the serfs when not visiting each other’s clubs. Shallow thinking carries the day, and the media have adapted themselves accordingly. Democracy means ensuring that only the right people get into power.

Dismembered European corpses and terrorized children are just part of maintaining this ideological purity. War is acceptable once more. Let’s hope such leaders and ideologies can be sidelined by those beyond Europe who are willing to give peace a chance.

There is no virtue in the promotion of mass death. Europe, with its leadership, will benefit from outside help and basic education. It would benefit even further from leadership that values the lives of its people.

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoRCMP Whistleblowers Accuse Members of Mark Carney’s Inner Circle of Security Breaches and Surveillance

-

Also Interesting2 days ago

Also Interesting2 days agoBetFury Review: Is It the Best Crypto Casino?

-

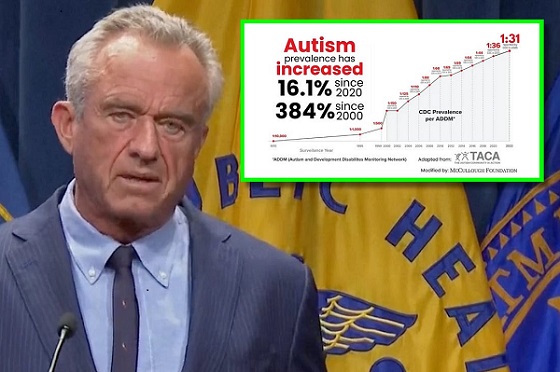

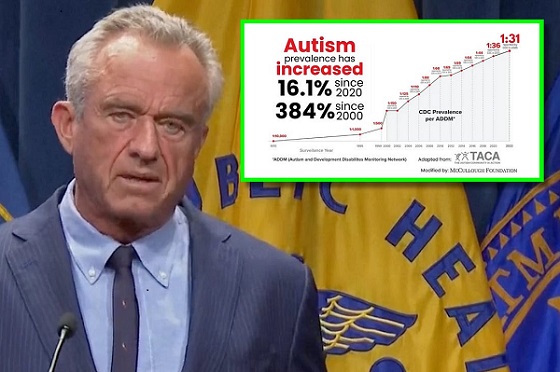

Autism2 days ago

Autism2 days agoRFK Jr. Exposes a Chilling New Autism Reality

-

COVID-192 days ago

COVID-192 days agoCanadian student denied religious exemption for COVID jab takes tech school to court

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoBureau Exclusive: Chinese Election Interference Network Tied to Senate Breach Investigation

-

International2 days ago

International2 days agoUK Supreme Court rules ‘woman’ means biological female

-

2025 Federal Election2 days ago

2025 Federal Election2 days agoNeil Young + Carney / Freedom Bros

-

Health2 days ago

Health2 days agoWHO member states agree on draft of ‘pandemic treaty’ that could be adopted in May